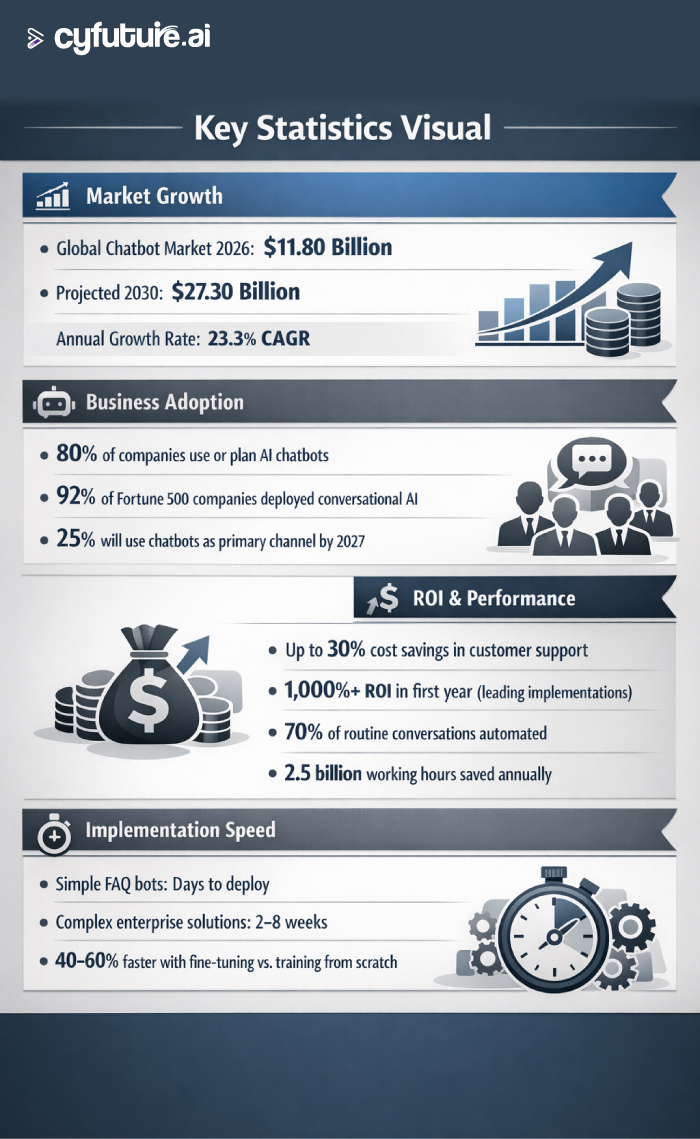

The chatbot revolution isn't coming—it's already here, and it's reshaping business operations at an unprecedented scale. By 2026, the global chatbot market has surged to $11.80 billion, representing a remarkable 23.3% annual growth rate. More striking: 80% of companies now use or plan to implement AI-powered chatbots for customer service, and 92% of Fortune 500 companies have integrated conversational AI into their workflows. The differentiator between chatbots that deliver exceptional ROI and those that frustrate users? The quality and methodology of training on proprietary company data.

Why Training on Company Data Matters

Generic, off-the-shelf chatbots simply cannot compete in today's demanding business environment. According to recent industry analysis, 70% of businesses now focus on integrating internal knowledge into AI systems to ensure their chatbots provide accurate, contextually relevant responses. This strategic shift has proven its worth: companies deploying custom-trained chatbots report up to 30% cost savings in customer support, with some implementations achieving ROI exceeding 1,000% within the first year.

The stakes are high. By 2027, 25% of organizations will use chatbots as their primary customer service channel, handling up to 70% of routine conversations autonomously. Chatbots trained on company-specific data deliver faster response times, improved contextual understanding, and personalized experiences that generic solutions cannot match. For technical leaders evaluating AI implementation strategies, the question isn't whether to train on proprietary data—it's how to do it effectively.

Understanding Chatbot Architecture for Custom Training

Before diving into training methodologies, developers must understand the core components that enable chatbots to learn from company data. Modern AI chatbots integrate Natural Language Processing (NLP) and Machine Learning (ML) to analyze user queries, recognize keywords, and generate contextually appropriate responses. The architecture typically consists of seven critical components:

Natural Language Understanding (NLU): Converts user text and speech into structured data machines can process through tokenization, lemmatization, and entity recognition. This component handles the linguistic complexity of human communication, including ambiguities and context-dependent meanings.

Dialog Manager: Maintains conversation state and tracks interactions to modify responses based on conversational flow. This enables the chatbot to understand when a user says "Change my order to chocolate ice cream" after initially requesting strawberry.

Knowledge Base: Stores company-specific information including product data, policies, FAQs, documentation, and historical interaction patterns. The quality and structure of this data directly impacts chatbot performance.

Natural Language Generation (NLG): Transforms structured machine data into human-readable responses through content determination, text structuring, sentence aggregation, and linguistic realization.

Advanced architectures increasingly leverage Transformer models and BERT (Bidirectional Encoder Representations from Transformers) for superior contextual understanding. These models use attention mechanisms to process entire conversational contexts, not just individual queries.

Preparing Company Data for Training

Data preparation represents the most critical phase of chatbot training, consuming approximately 70% of the total development effort. The quality of training data directly determines model performance—garbage in, garbage out remains an immutable principle in AI development.

Data Collection Strategy

Begin by identifying all relevant data sources within your organization. This includes customer service transcripts, product documentation, technical manuals, FAQ databases, support tickets, internal wikis, email communications, and CRM records. For enterprises, this often means aggregating data from multiple departments and systems.

Modern platforms have simplified this process significantly. According to 2026 implementation data, organizations can now integrate data from Google Drive, Microsoft OneDrive, and other cloud platforms directly into training pipelines. Some no-code platforms enable chatbot training through simple web scraping of company websites, document uploads, or API connections to existing knowledge bases.

Data Cleaning and Preprocessing

Raw data requires extensive preprocessing before training. This involves removing duplicates, correcting errors, normalizing formatting, and eliminating irrelevant information. For text data specifically, apply tokenization to break text into manageable pieces, lemmatization to reduce words to base forms, and stop word removal to filter out common but uninformative words.

Ensure diversity in your training dataset to cover various user query patterns. Research shows that using engagement-oriented training techniques can improve conversation length by up to 70%. Include examples of typical questions phrased in multiple ways, incorporating different linguistic patterns, slang, regional dialects, and even common misspellings.

Data Augmentation Techniques

To increase dataset variety and robustness, implement these augmentation methods:

Paraphrasing: Reword existing texts to create variance while maintaining semantic meaning. This helps the model recognize different ways users might phrase the same query.

Translation: Use translation tools to create multilingual datasets, essential for global enterprises. In 2026, 54% of consumers prefer interacting with chatbots via messaging apps, many in their native languages.

Synthetic Data Generation: Leverage AI models to create realistic training examples. However, exercise caution—as OpenAI CEO Sam Altman noted, while synthetic data can supplement training, over-reliance on it may prove inefficient. Epoch AI research projects that the AI industry may face training data constraints between 2026 and 2032, making high-quality proprietary data increasingly valuable.

The Training Process: Technical Implementation

Model Selection and Architecture

Choosing the appropriate model architecture requires balancing performance requirements, available resources, and deployment constraints. For 2026, options range from rule-based systems for predictable scenarios to advanced Large Language Models (LLMs) for complex, open-ended interactions.

Rule-Based Chatbots: Suitable for specific, well-defined use cases with predictable user intents. These follow decision trees and pattern matching, offering simplicity and complete control but limited flexibility.

Hybrid Chatbots: Combine rule-based logic for frequent queries with NLP capabilities for complex inputs. This approach offers a pragmatic balance for many enterprise applications.

AI-Powered Chatbots with LLMs: Leverage models like GPT-4, Claude, or Llama for sophisticated natural language understanding and generation. These excel at handling nuanced queries and maintaining context across multi-turn conversations.

Cyfuture AI offers comprehensive AI infrastructure services including advanced GPU cloud services and pre-configured AI development environments that dramatically simplify model training. Their platform supports various model architectures with flexible scaling, enabling organizations to train chatbots efficiently without massive upfront infrastructure investments.

Training Methodology

The actual training process involves iteratively adjusting model parameters based on your prepared company data. Key steps include:

Hyperparameter Optimization: Configure learning rate, batch size, and training iterations. These parameters significantly impact model performance and convergence speed. Use techniques like cross-validation and grid search to identify optimal values.

Fine-Tuning Pre-Trained Models: Rather than training from scratch, fine-tune existing models on your company data. This approach leverages the general language understanding of base models while specializing them for your domain. Research indicates this method reduces training time by 40-60% while achieving superior performance.

Continuous Training: Implement feedback loops to continuously improve the model based on real user interactions. Studies show that organizations using continuous improvement methodologies based on user feedback can increase chatbot effectiveness by over 30%.

Handling Challenges

Linguistic Ambiguity: Human communication contains nuance, sarcasm, and context-dependent meanings. Advanced Transformer architectures with contextual embeddings help address this challenge by analyzing entire conversational contexts.

Bias Mitigation: AI models can inadvertently adopt prejudices from training data. Ensure data diversity, implement regular verification processes, and establish clear ethical guidelines. Balance your training data across demographics, use cases, and scenarios.

Scalability: As conversation volumes grow, ensure your infrastructure can scale. According to industry data, contact centers implementing AI chatbots will reduce agent labor costs by $80 billion by 2026 through handling increased volumes without proportional staff increases.

Integration and Deployment

System Integration

Your trained chatbot must integrate seamlessly with existing business systems. This includes CRM platforms (Salesforce, HubSpot), helpdesk software (Zendesk, Freshdesk), messaging channels (WhatsApp, Slack, Microsoft Teams), and internal databases.

Implementation timelines vary by complexity. Simple FAQ bots can deploy in days, while comprehensive multi-channel deployments typically require 2-8 weeks including integration testing. Cyfuture AI's platform enables streamlined integration with major enterprise systems, reducing deployment friction.

Multi-Channel Deployment

Modern customers expect omnichannel support. Data shows 79% of chatbot engagements occur on Facebook Messenger, while over 1.4 billion people globally use messaging apps supporting chatbot access. Deploy your chatbot across relevant channels:

- Website chat widgets for direct customer engagement

- Mobile applications for on-the-go support

- Social media platforms (Facebook, Instagram, Twitter)

- Messaging apps (WhatsApp, Telegram, WeChat)

- Voice interfaces for phone-based interactions

Monitoring and Optimization

Post-deployment monitoring is critical. Track key performance indicators including response accuracy, resolution rate, conversation completion rate, user satisfaction scores, and average handling time.

Implement "Human-in-the-Loop" (HITL) workflows where complex queries escalate to human agents. Research indicates that 95% of customer interactions will be AI-powered by 2025, but maintaining human oversight for edge cases ensures quality and captures training opportunities.

Use A/B testing to evaluate improvements. Deploy model variations to different user segments and measure performance differences. Industry data shows that continuous optimization based on real usage patterns can improve conversion rates by up to 23%.

Security and Compliance Considerations

When training chatbots on company data, security and compliance cannot be afterthoughts. Implement these critical safeguards:

Data Privacy: Ensure training data handling complies with GDPR, CCPA, and other relevant regulations. Remove or anonymize Personally Identifiable Information (PII) from training datasets.

Access Controls: Implement role-based access to chatbot administration and training data. Use multi-factor authentication and audit trails.

Encryption: Encrypt data both in transit and at rest. For sensitive industries like healthcare and finance, ensure end-to-end encryption meets regulatory requirements including HIPAA.

Data Residency: For organizations with data sovereignty requirements, choose providers with appropriate data center locations. Cyfuture AI operates state-of-the-art Tier III data centers strategically located in India, ensuring compliance with local data protection regulations while providing enterprise-grade reliability and security.

Real-World Impact: Success Metrics

Companies implementing custom-trained chatbots report compelling results:

- Cost Reduction: Up to 30% savings on customer support costs, translating to approximately $8 billion in global savings during 2025

- Efficiency Gains: 2.5 billion working hours saved annually through chatbot automation

- Revenue Impact: E-commerce businesses deploying AI chat assistants report 20% increases in average order value within the first week

- Scale: Leading implementations like Octopus Energy now handle 44% of customer inquiries automatically, effectively replacing the workload of approximately 250 support staff members

- Response Time: 30% faster response times through automation, with some implementations achieving sub-40-second first response times

MIT's entrepreneurship program provides a compelling use case: by partnering with a custom AI platform to train a mentorship chatbot on dense datasets, they scaled personalized mentoring across hundreds of students. What previously required scheduling with busy faculty now happens instantly, 24/7.

The Future: Emerging Trends in 2026

As you build your chatbot training strategy, consider these forward-looking developments:

Multimodal AI: By 2027, 40% of generative AI solutions will be multimodal, processing text, images, audio, and video simultaneously. This expands chatbot capabilities beyond text-based interactions.

Autonomous Agents: Enterprise applications increasingly feature task-specific AI agents that can take real-time actions, navigate visual interfaces, and collaborate seamlessly with humans. Gartner predicts that by 2026, 40% of enterprise applications will feature such agents, up from less than 5% in 2025.

Voice Integration: Voice-based chatbots continue growing, driven by consumer comfort with digital assistants and improvements in speech recognition accuracy.

Energy-Efficient Training: Research at institutions like the University of Bonn has developed spiking neuron techniques that dramatically reduce AI training energy consumption, addressing environmental concerns while lowering operational costs.

Conclusion

Training a chatbot on company data represents a strategic investment with measurable returns. The global chatbot market's trajectory—projected to reach $27.3 billion by 2030—reflects widespread recognition of this technology's transformative potential. Success requires methodical data preparation, appropriate architecture selection, rigorous training processes, and continuous optimization.

For technical leaders and developers, the tools and infrastructure needed for effective chatbot training have never been more accessible. Platforms like Cyfuture AI provide enterprise-grade GPU infrastructure, scalable computing resources, and comprehensive AI development environments that eliminate traditional barriers to entry. With their advanced cloud services, organizations can rapidly prototype, train, and deploy chatbots without massive capital investments in on-premises infrastructure.

The competitive advantage belongs to organizations that move decisively. As 95% of customer interactions transition to AI-powered channels, companies delaying implementation face higher costs, steeper learning curves, and customers already accustomed to superior AI experiences elsewhere. The question for 2026 isn't whether to train a chatbot on your company data—it's how quickly you can deploy it effectively.

Frequently Asked Questions

Q: How long does it take to train a chatbot on company data? A: Training timelines vary significantly based on complexity. Simple FAQ bots can be trained and deployed within days using modern no-code platforms. Comprehensive enterprise chatbots with complex integrations typically require 2-8 weeks from data preparation through deployment. However, training should be viewed as continuous—the most effective chatbots undergo ongoing refinement based on user interactions.

Q: What's the minimum amount of data needed to train an effective chatbot? A: While no absolute minimum exists, aim for a diverse dataset covering your primary use cases thoroughly. For rule-based systems, a well-structured knowledge base with 50-100 common queries and responses can suffice. For AI-powered chatbots using machine learning, thousands of conversation examples across various scenarios typically yield better results. Quality matters more than quantity—a well-curated dataset of 1,000 high-quality interactions outperforms 10,000 poorly structured examples.

Q: How do I handle data privacy when training chatbots on sensitive company information? A: Implement a multi-layered approach: First, anonymize all PII in training data through tokenization or removal. Second, use encryption for data in transit and at rest. Third, implement strict access controls with audit trails. Fourth, work with providers offering appropriate data residency—for instance, Cyfuture AI's Tier III data centers in India ensure compliance with local data sovereignty requirements. Finally, establish clear policies for data retention and deletion.

Q: Can I train a chatbot without technical expertise? A: Yes. The landscape has evolved dramatically—2026 offers numerous no-code and low-code platforms that simplify chatbot training for non-technical users. Platforms like Jotform AI Agents, Chatbase, and others provide visual interfaces for uploading data, defining intents, and deploying chatbots without programming. However, for complex enterprise implementations with custom integrations and specialized requirements, technical expertise remains valuable for optimization and troubleshooting.

Author Bio:

Meghali is a tech-savvy content writer with expertise in AI, Cloud Computing, App Development, and Emerging Technologies. She excels at translating complex technical concepts into clear, engaging, and actionable content for developers, businesses, and tech enthusiasts. Meghali is passionate about helping readers stay informed and make the most of cutting-edge digital solutions.