The Memory Revolution in Artificial Intelligence

Wondering how AI agents maintain context and memory across conversations? Vector databases have emerged as foundational infrastructure that lets AI agents remember, learn, and provide contextually accurate responses by storing and retrieving high-dimensional data in real time.

Here's the thing:

Traditional AI models are like brilliant individuals with amnesia—they can solve complex problems but can't remember what happened five minutes ago. This fundamental limitation has been the Achilles' heel of artificial intelligence, preventing AI systems from delivering truly personalized, context-aware experiences.

But something transformative is happening.

The vector database market surpassed $2.2 billion in 2024 and is anticipated to expand at around 21.9% CAGR from 2025 to 2034, signaling a massive shift in how AI systems operate. This explosive growth isn't just about technology—it's about fundamentally changing how machines understand and interact with the world.

As Devin Pratt, research director at IDC's AI, automation, data and analytics division, notes: "Retrieval-augmented generation pipelines are important for anchoring LLM outputs to trusted, context-specific data. While vector search finds semantically similar content, RAG frameworks determine which documents actually feed into the model, cutting down on AI hallucinations, and improving answer accuracy."

What is a Vector Database?

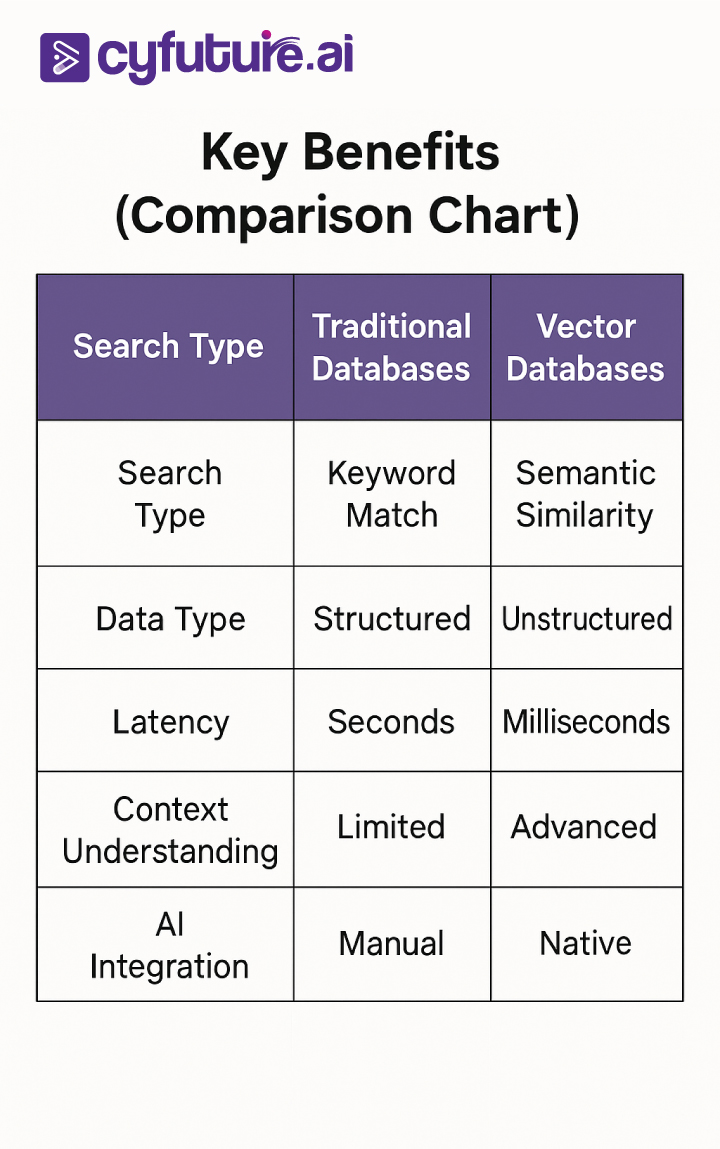

Vector databases are specialized data management systems designed to store, index, and retrieve high-dimensional vector embeddings—numerical representations of unstructured data like text, images, and audio. Unlike traditional relational databases that organize information in rows and columns, vector databases operate in multidimensional space where semantic similarity determines proximity.

Why does this matter now?

In 2025, as we witness an explosion in the use of large language models, agentic AI, and Retrieval-Augmented Generation (RAG), the demand for fast, scalable, and context-aware memory systems has surged. Vector databases don't just store data—they store understanding itself.

When an AI agent needs to answer "What's the weather like today?" versus "How's the climate outside?", traditional keyword searches would fail to recognize these as similar queries. Vector databases, however, understand that these questions share semantic meaning, enabling more intelligent retrieval.

The Architecture of AI Agent Memory Systems

How Vector Embeddings Power Semantic Understanding

At the core of vector databases lies the embedding model—a neural network that converts words, sentences, or entire documents into dense numerical vectors, typically containing hundreds or thousands of dimensions. These vectors capture semantic relationships: words with similar meanings cluster together in vector space.

Here's what makes this revolutionary:

Vector databases have their roots in similarity search, a core concept that uses multidimensional data to provide context about subject matter. A vector is an ordered list of numbers that represents key features of unstructured data such as text, images, or audio. The closer two vectors are to each other in space, the more likely it is that the data they represent are related.

For example, the vectors for "dog," "puppy," and "canine" would cluster closely together, while "cat" would be nearby but slightly distant, and "automobile" would be far away in the vector space.
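To make the clustering idea concrete, here is a minimal sketch of cosine similarity, a closeness measure many vector databases support. The 4-dimensional vectors and their axis labels are hand-picked assumptions for illustration; real embedding models learn hundreds or thousands of dimensions that have no human-readable labels.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy "embeddings" with hand-picked axes:
# [animal, canine-ness, feline-ness, vehicle]
vectors = {
    "dog":        [0.9, 0.9, 0.1, 0.0],
    "puppy":      [0.9, 0.8, 0.1, 0.0],
    "canine":     [0.8, 0.9, 0.0, 0.0],
    "cat":        [0.9, 0.2, 0.9, 0.0],
    "automobile": [0.0, 0.0, 0.0, 1.0],
}

query = "dog"
ranked = sorted(
    (word for word in vectors if word != query),
    key=lambda word: cosine_similarity(vectors[query], vectors[word]),
    reverse=True,
)
for word in ranked:
    print(f"{word:<11} {cosine_similarity(vectors[query], vectors[word]):.3f}")
```

With these toy values, "puppy" and "canine" score close to 1.0, "cat" lands in between, and "automobile" scores 0.0, mirroring the clustering described in the example.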

The RAG Architecture: Connecting Knowledge to Generation

Retrieval-Augmented Generation (RAG) has become the standard architecture for building intelligent AI agents. The global retrieval-augmented generation market was estimated at $1.2 billion in 2024 and is projected to reach $11.0 billion by 2030, growing at a CAGR of 49.1% from 2025 to 2030.

And here's the kicker:

RAG works by combining three essential components:

- Retrieval Engine: Searches vector databases to find semantically relevant information based on user queries

- Embedding Model: Converts queries and stored data into comparable vector representations

- Generator (LLM): Synthesizes retrieved information with its pre-trained knowledge to create accurate, context-aware responses

When a user asks a legal question, the system converts that query into a vector, searches the vector database, pulls out the five most relevant documents, and feeds them to the LLM for a contextual, grounded response. The model can now draw on your case history, your specific clauses, and your preferred terminology, because retrieval places them directly in its prompt.
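The retrieve-the-top-five step can be sketched with a brute-force, in-memory store. Everything here is an assumption for illustration: `toy_embed` is a hypothetical hashed bag-of-words stand-in for a real embedding model (it matches shared words, not meaning), the class name is made up, and the final LLM call is left out. The default `k=5` mirrors the five documents in the example.

```python
import hashlib
import heapq
import math
import re

DIM = 1024  # hypothetical vector size for the toy embedding below

def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: a unit-length hashed bag of words.
    It only captures shared vocabulary, not true semantic similarity."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9']+", text.lower()):
        bucket = int.from_bytes(hashlib.sha256(token.encode()).digest()[:4], "big") % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

class InMemoryVectorStore:
    """Exact (brute-force) search; real vector databases use approximate
    nearest-neighbor indexes such as HNSW to scale to millions of vectors."""

    def __init__(self) -> None:
        self.items: list[tuple[str, list[float]]] = []

    def add(self, text: str) -> None:
        self.items.append((text, toy_embed(text)))

    def search(self, query: str, k: int = 5) -> list[tuple[float, str]]:
        q = toy_embed(query)
        # Both vectors are unit length, so the dot product equals cosine similarity.
        scored = ((sum(a * b for a, b in zip(q, v)), text) for text, v in self.items)
        return heapq.nlargest(k, scored)

store = InMemoryVectorStore()
for doc in [
    "Clause 7 limits liability to direct damages.",
    "The lease renews annually unless terminated in writing.",
    "Liability for indirect damages is excluded.",
]:
    store.add(doc)

for score, text in store.search("Which damages is liability limited to?", k=2):
    print(f"{score:.2f}  {text}")
```

The retrieved texts would then be inserted into the LLM prompt; brute force is fine for a handful of documents but scales linearly with the collection, which is exactly what dedicated indexes avoid.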

Why AI Agents Need Long-Term Memory

The Context Window Challenge

LLMs are limited by context windows, essentially the model's "short-term memory," or how much text it can consider when generating a coherent response. Because only so much fits in that window, the system must search for and retrieve small, relevant chunks of data each time a query is made.
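A minimal sketch of fitting retrieved chunks into a limited window, assuming each chunk already carries a similarity score. `approx_tokens` is a rough, hypothetical proxy (one token per word); real tokenizers count differently, so a production system would use the target model's own tokenizer.

```python
def approx_tokens(text: str) -> int:
    # Rough assumption: one token per whitespace-separated word.
    return len(text.split())

def pack_context(scored_chunks: list[tuple[float, str]], budget: int) -> list[str]:
    """Greedily keep the highest-scoring chunks that still fit in the token budget."""
    selected: list[str] = []
    used = 0
    for _score, chunk in sorted(scored_chunks, reverse=True):
        cost = approx_tokens(chunk)
        if used + cost <= budget:  # skip chunks that would overflow, keep looking
            selected.append(chunk)
            used += cost
    return selected

chunks = [
    (0.91, "Refunds are issued within 14 days."),
    (0.85, "Refunds require a receipt and the original packaging in all cases."),
    (0.40, "Our office is closed on public holidays."),
]
print(pack_context(chunks, budget=14))
```

Greedy packing skips the 11-word chunk but still admits the low-scoring third one, so real systems often combine a budget like this with a minimum-score cutoff.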

But wait—there's more.

Even the most advanced language models with massive context windows face a fundamental problem: they can't truly "learn" from interactions without retraining. Every conversation starts fresh unless there's an external memory system.

OpenAI introduced memory in ChatGPT in 2024. The feature allows the assistant to retain personalized context such as a user's name, tone preferences, and prior instructions across sessions. This represents a paradigm shift from stateless AI to persistent, context-aware agents.

The Performance Impact of Specialized Memory Systems

The numbers don't lie.

Zep's specialized memory system scored up to 18% higher accuracy on a long-term memory benchmark while using roughly 1/10th of the processing time and 1/100th of the context tokens compared to the "dump everything in context" approach. This dramatic efficiency gain translates directly to:

- Faster response times: Cutting processing time by roughly 90% shortens the wait for every answer

- Lower operational costs: Using 99% fewer tokens means significant cost savings

- Higher accuracy: More relevant context leads to better responses
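The cost side of that 1/100th token figure is simple arithmetic. The traffic volume and the per-million-token price below are hypothetical placeholders, not quotes from any provider; only the 100:1 token ratio comes from the comparison above.

```python
def monthly_input_cost(tokens_per_query: int, queries_per_day: int,
                       usd_per_million_tokens: float, days: int = 30) -> float:
    """Input-token spend for one month at a flat per-token price."""
    total_tokens = tokens_per_query * queries_per_day * days
    return total_tokens * usd_per_million_tokens / 1_000_000

PRICE = 2.00       # hypothetical USD per million input tokens
QUERIES = 10_000   # hypothetical queries per day

full_context = monthly_input_cost(100_000, QUERIES, PRICE)  # "dump everything" prompt
with_memory = monthly_input_cost(1_000, QUERIES, PRICE)     # 1/100th of the tokens
print(f"full context: ${full_context:,.0f}/month")
print(f"with memory:  ${with_memory:,.0f}/month")
print(f"savings:      {1 - with_memory / full_context:.0%}")
```

Under these assumptions the monthly input bill drops from $60,000 to $600; whatever the actual price, the savings on input tokens scale with the token ratio.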

Real-World Applications Transforming Industries

Healthcare: Precision Medicine Through Contextual Memory

A recent study in npj Health Systems (2025) discusses how RAG-powered AI transforms healthcare by integrating real-time diagnostic data, drug interactions, and the latest clinical research, ensuring medical decisions are based on current information.

Consider this scenario:

A physician asks an AI agent about drug interactions for a patient with multiple conditions. Without vector database-powered memory, the AI might provide generic information based on outdated training data. With RAG and vector search, the system retrieves the latest clinical guidelines, patient-specific history, and recent research papers—all in milliseconds.

Financial Services: Real-Time Market Intelligence

Financial markets change by the second, so static AI models are unreliable at best. Banks and investment companies have adopted RAG-enhanced AI analysts that retrieve data from live market reports, earnings transcripts, and macroeconomic trends before generating responses and supporting decisions.

This capability enables:

- Instant analysis of breaking financial news

- Real-time risk assessment based on current market conditions

- Personalized investment recommendations that adapt to portfolio changes

E-Commerce: Hyper-Personalized Shopping Experiences

A Forbes (2025) report revealed that a leading online retailer saw a 25% increase in customer engagement after implementing RAG-driven search and product recommendations.

Now here's where it gets interesting:

Vector databases enable AI shopping assistants to understand user preferences not just from explicit searches but from behavioral patterns—what users browse, how long they view products, what they add to carts but don't purchase. This semantic understanding creates truly personalized experiences.
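One simple way to turn behavioral signals into something a vector database can search is to build a user "preference vector" as a weighted average of the embeddings of products the user interacted with, then rank unseen products by similarity to it. This is a minimal sketch under stated assumptions: the 3-D product vectors, the event weights, and the log-damped dwell-time factor are all hypothetical.

```python
import math

# Hypothetical 3-D product embeddings with axes [outdoor, running, formal].
PRODUCTS = {
    "trail_shoes":  [0.9, 0.8, 0.0],
    "running_vest": [0.6, 0.9, 0.1],
    "dress_shoes":  [0.0, 0.1, 0.9],
    "hiking_pack":  [0.9, 0.3, 0.0],
}
# Hypothetical weights: adding to cart signals more intent than viewing.
EVENT_WEIGHTS = {"view": 1.0, "add_to_cart": 3.0}

def normalize(v: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]

def preference_vector(events: list[tuple[str, str, float]]) -> list[float]:
    """events: (product_id, event_type, dwell_seconds) tuples."""
    acc = [0.0, 0.0, 0.0]
    for product_id, kind, dwell in events:
        # Longer dwell counts more, with diminishing returns (log damping is an assumption).
        weight = EVENT_WEIGHTS[kind] * math.log1p(dwell)
        acc = [a + weight * x for a, x in zip(acc, normalize(PRODUCTS[product_id]))]
    return normalize(acc)

def recommend(events: list[tuple[str, str, float]], k: int = 2) -> list[str]:
    """Rank products the user has not interacted with by cosine similarity."""
    pref = preference_vector(events)
    seen = {product_id for product_id, _, _ in events}
    scores = {
        pid: sum(a * b for a, b in zip(pref, normalize(vec)))
        for pid, vec in PRODUCTS.items() if pid not in seen
    }
    return sorted(scores, key=scores.get, reverse=True)[:k]

events = [("trail_shoes", "view", 40), ("running_vest", "add_to_cart", 15)]
print(recommend(events))
```

A shopper who browsed trail shoes and carted a running vest is steered toward the hiking pack rather than dress shoes, even though neither was ever searched for explicitly.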

Cyfuture AI: Powering Next-Generation AI Infrastructure

At Cyfuture AI, we're at the forefront of this transformation, providing enterprise-grade AI infrastructure that seamlessly integrates vector database solutions with cutting-edge LLMs. Our platform enables businesses to deploy RAG-powered AI agents that deliver unparalleled accuracy and contextual understanding.

With Cyfuture AI's managed services, organizations can:

- Deploy production-ready vector search in hours, not months

- Scale from prototype to millions of vectors seamlessly

- Ensure data security with enterprise-grade compliance features

Technical Implementation: Building AI Agents with Vector Memory

Choosing the Right Vector Database

The landscape is crowded, but here's what matters:

In 2025, choosing the right vector database can mean milliseconds of advantage. Key considerations include how well it supports RAG as a production safety net against hallucinations, multimodal capabilities for images and audio, sub-second latency for a responsive user experience, and serverless pricing combined with compression techniques such as scalar quantization.
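Scalar quantization can be sketched in a few lines: each 32-bit float is mapped to an 8-bit integer code using the vector's min/max range, which shrinks storage about 4x at the cost of a small rounding error. This per-vector min/max scheme is one simple variant chosen for illustration; individual databases implement their own versions with different calibration.

```python
from array import array

def scalar_quantize(vec: list[float]) -> tuple[array, float, float]:
    """Map each float to an int8 code in [-128, 127] using the vector's own min/max."""
    lo, hi = min(vec), max(vec)
    scale = (hi - lo) / 255 or 1.0  # avoid division by zero for constant vectors
    codes = array("b", (round((x - lo) / scale) - 128 for x in vec))
    return codes, lo, scale

def dequantize(codes: array, lo: float, scale: float) -> list[float]:
    """Approximate reconstruction; each component is off by at most about scale / 2."""
    return [(c + 128) * scale + lo for c in codes]

original = array("f", [0.12, -0.53, 0.98, 0.07, -0.22, 0.41, -0.91, 0.33])  # float32
codes, lo, scale = scalar_quantize(list(original))
restored = dequantize(codes, lo, scale)

print(f"{original.itemsize * len(original)} bytes -> {codes.itemsize * len(codes)} bytes")
print(f"max error: {max(abs(a - b) for a, b in zip(original, restored)):.4f}")
```

On typical platforms this prints 32 bytes shrinking to 8 bytes; the two stored floats (`lo`, `scale`) are a small fixed overhead per vector.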

Top Contenders for 2025:

- Pinecone: Serverless indexes with per-request pricing (~$25/mo base) and single-region latency ≈ 50-100 ms p95, offering zero-ops scaling

- Milvus: Open-source powerhouse for on-premise deployments

- Weaviate: GraphQL-based with hybrid search capabilities

- Qdrant: Rust-based performance with 4-8× memory savings through quantization

The RAG Pipeline Architecture

Here's the step-by-step breakdown:

Ingestion Phase (Offline):

- Extract raw documents from data sources

- Clean content to remove noise and irrelevant information

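- Chunk documents into pieces that fit within the embedding model's context window

- Deduplicate chunks so redundant content is not stored or retrieved twice

- Generate embeddings using the chosen model

- Load the vectors into the vector database along with indexed metadata attributes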
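A minimal sketch of the offline ingestion steps, assuming documents arrive as raw strings keyed by source name. The cleaning rules, chunk sizes, and record fields are hypothetical choices, word counts stand in for the embedding model's real token limit, and `embed` is whatever embedding function you plug in (a placeholder is used in the usage lines).

```python
import hashlib
import re
from typing import Callable

def clean(text: str) -> str:
    """Strip leftover HTML tags and collapse whitespace (real cleaning is source-specific)."""
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip()

def chunk(text: str, max_words: int = 200, overlap: int = 40) -> list[str]:
    """Split text into overlapping word windows that together cover every word."""
    assert 0 <= overlap < max_words
    words = text.split()
    step = max_words - overlap
    starts = range(0, max(len(words) - overlap, 1), step)
    return [" ".join(words[i:i + max_words]) for i in starts if words[i:i + max_words]]

def ingest(docs: dict[str, str], embed: Callable[[str], list[float]]) -> list[dict]:
    """Clean -> chunk -> deduplicate -> embed -> build records ready to load."""
    seen: set[str] = set()
    records = []
    for source, raw in docs.items():
        for position, piece in enumerate(chunk(clean(raw))):
            digest = hashlib.sha256(piece.lower().encode()).hexdigest()
            if digest in seen:  # skip exact duplicates (case-insensitive)
                continue
            seen.add(digest)
            records.append({
                "id": digest[:16],
                "text": piece,
                "vector": embed(piece),
                # Metadata attributes a vector database can index for filtering.
                "source": source,
                "position": position,
            })
    return records

docs = {
    "faq.html": "<p>Refunds are issued within 14 days.</p>",
    "faq-copy.html": "Refunds are   issued within 14 days.",  # same text, different source
}
# Placeholder embedding so the sketch runs; swap in a real model in practice.
records = ingest(docs, embed=lambda text: [float(len(text))])
print(len(records), records[0]["source"])
```

The duplicate page collapses to a single record, so it is embedded, stored, and retrieved only once.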

Retrieval Phase (Real-time):

- Convert user query to vector embedding

- Execute similarity search across vector database

- Retrieve top-K most relevant document chunks

- Pass the retrieved chunks to the LLM as part of an augmented prompt

- Generate accurate, grounded response
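The real-time steps can be sketched end to end as follows. `toy_embed` is a hypothetical hashed bag-of-words stand-in for a real embedding model, the records mimic what an ingestion phase would load, and the prompt wording is an assumption; sending the prompt to an LLM is left to whichever API you use.

```python
import hashlib
import math
import re

DIM = 1024  # hypothetical size for the toy embedding

def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: unit-length hashed bag of words."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9']+", text.lower()):
        vec[int.from_bytes(hashlib.sha256(token.encode()).digest()[:4], "big") % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def retrieve(query: str, records: list[dict], k: int = 3) -> list[dict]:
    """Embed the query, score every record, keep the top-k."""
    q = toy_embed(query)
    def score(record: dict) -> float:
        return sum(a * b for a, b in zip(q, record["vector"]))
    return sorted(records, key=score, reverse=True)[:k]

def build_prompt(query: str, chunks: list[dict]) -> str:
    """Number each chunk so the model can cite its sources."""
    context = "\n".join(
        f"[{i}] ({c['source']}) {c['text']}" for i, c in enumerate(chunks, start=1)
    )
    return (
        "Answer using only the numbered context and cite sources like [1].\n"
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )

corpus = [
    ("policy.md", "Refunds are issued within 14 days of a return."),
    ("shipping.md", "Standard shipping takes 3 to 5 business days."),
    ("policy.md", "Refunds require the original receipt."),
]
records = [{"source": s, "text": t, "vector": toy_embed(t)} for s, t in corpus]

question = "How many days until refunds are issued?"
prompt = build_prompt(question, retrieve(question, records, k=2))
print(prompt)  # this prompt would then be sent to an LLM (not shown)
```

Numbering the chunks and telling the model to admit when the context lacks an answer are common grounding tactics; they reduce, but do not eliminate, hallucinations.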

Advanced Memory Architectures

Mem0 distinguishes itself through a unified hybrid-memory architecture and open-source approach. By natively combining graph, vector, and key-value stores, it handles both semantic and symbolic aspects of memory out-of-the-box.

This hybrid approach enables:

- Semantic Memory: Understanding concepts and relationships

- Episodic Memory: Remembering specific events and interactions

- Procedural Memory: Encoding workflows and decision trees
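To make the hybrid idea concrete, here is a hypothetical sketch (not Mem0's actual API or internals) of one way to keep three stores side by side: a key-value map for stable facts, a vector list for past episodes, and an adjacency-list graph for relationships between entities. `toy_embed` is a hashed bag-of-words stand-in for a real embedding model, and the substring-based entity matching is a deliberate simplification.

```python
import hashlib
import math
import re
from collections import defaultdict

def toy_embed(text: str, dim: int = 1024) -> list[float]:
    """Hypothetical stand-in for an embedding model: unit-length hashed bag of words."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9']+", text.lower()):
        vec[int.from_bytes(hashlib.sha256(token.encode()).digest()[:4], "big") % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

class HybridMemory:
    """Hypothetical sketch of a hybrid memory with three stores side by side."""

    def __init__(self) -> None:
        self.facts: dict[str, str] = {}                    # key-value: stable facts, preferences
        self.episodes: list[tuple[str, list[float]]] = []  # vectors: past interactions
        self.graph: dict[str, set[str]] = defaultdict(set)  # graph: entity relationships

    def set_fact(self, key: str, value: str) -> None:
        self.facts[key] = value

    def log_episode(self, text: str) -> None:
        self.episodes.append((text, toy_embed(text)))

    def relate(self, a: str, b: str) -> None:
        self.graph[a].add(b)
        self.graph[b].add(a)

    def recall(self, query: str, k: int = 1) -> dict:
        q = toy_embed(query)
        ranked = sorted(self.episodes,
                        key=lambda ep: sum(x * y for x, y in zip(q, ep[1])),
                        reverse=True)
        # Naive entity linking by substring match (an assumption; real systems use NER).
        mentioned = [entity for entity in self.graph if entity in query.lower()]
        return {
            "facts": dict(self.facts),
            "episodes": [text for text, _ in ranked[:k]],
            "related": sorted({n for e in mentioned for n in self.graph[e]}),
        }

mem = HybridMemory()
mem.set_fact("preferred_tone", "concise")
mem.log_episode("User asked for a refund on order 1142 and was told it takes 14 days.")
mem.log_episode("User said they are planning a trip to Lisbon in May.")
mem.relate("lisbon", "portugal")
print(mem.recall("Any tips about my Lisbon trip?"))
```

A single `recall` returns the user's tone preference, the relevant past conversation, and a related entity that the query never named.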

Market Dynamics and Industry Adoption

Explosive Market Growth

The statistics are staggering:

- The vector database market was valued at $1.6 billion in 2023 and is projected to reach $10.6 billion by 2032, expanding at a CAGR of 23.54%

- The natural language processing (NLP) segment accounted for a market share of around 45% in 2024

- The U.S. vector database market accounted for 81% of the revenue share in 2024
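Growth figures like these follow the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. A quick sketch to sanity-check the first bullet using only its own numbers, treating 2023 to 2032 as nine compounding years (an assumption about the report's convention):

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` for `years` periods."""
    return start_value * (1 + rate) ** years

implied = cagr(1.6, 10.6, 2032 - 2023)  # $1.6B (2023) -> $10.6B (2032)
print(f"Implied CAGR 2023-2032: {implied:.2%}")
print(f"$1.6B at 23.54% for 9 years: ${project(1.6, 0.2354, 9):.1f}B")
```

The implied rate comes out at about 23.4%, close to the reported 23.54%; the small gap likely reflects rounding of the published endpoints or a different base-year convention in the source report.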

Industry Segment Analysis

The IT & ITeS segment dominated the market and accounted for a significant revenue share in 2023, driven by AI-centric business models, cloud integration, and the need for high-speed data retrieval.

But look at this trajectory:

The Retail & E-Commerce segment is projected to register the highest compound annual growth rate during the forecast period, as businesses are utilizing vector databases to provide customized shopping experiences, personalize recommendation engines, and analyze shopper trends.

Regional Growth Patterns

Asia-Pacific is expected to register the fastest CAGR during the forecast period, driven by rapid advances in AI, ongoing digital transformation, higher e-commerce penetration, and government initiatives. Countries such as China, India, and Japan are also attracting investment in vector database technologies in industries like finance, healthcare, and smart cities.

Challenges and Solutions

Data Quality and Hallucination Prevention

The biggest challenge?

Keeping irrelevant or low-quality retrievals from reaching the model. One remedy is integrating retrieval confidence scoring into the generation process: by assigning confidence levels to retrieved documents, models can prioritize high-relevance data while filtering out noise. In medical diagnostics, this approach has reduced irrelevant retrievals by 20%, leading to more accurate AI-assisted recommendations.
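One simple way to implement retrieval confidence scoring is sketched below: drop hits whose similarity falls under a threshold, then turn the survivors' scores into normalized weights that the prompt builder can use to order or annotate context. The threshold, the softmax temperature, and the document names are hypothetical; this is an illustration, not the method used in the medical-diagnostics work cited.

```python
import math

def confidence_filter(results: list[tuple[str, float]], min_score: float = 0.75,
                      temperature: float = 0.1) -> list[tuple[str, float]]:
    """Drop low-similarity hits, then softmax the survivors' scores into weights."""
    kept = [(doc, score) for doc, score in results if score >= min_score]
    if not kept:
        return []  # nothing confident enough; the caller can decline to answer
    top = max(score for _, score in kept)
    # Subtracting the max keeps exp() numerically stable.
    exps = [math.exp((score - top) / temperature) for _, score in kept]
    total = sum(exps)
    weighted = [(doc, e / total) for (doc, _), e in zip(kept, exps)]
    return sorted(weighted, key=lambda pair: pair[1], reverse=True)

results = [("guideline_2024", 0.91), ("trial_summary", 0.82), ("press_release", 0.41)]
for doc, weight in confidence_filter(results):
    print(f"{doc:<15} {weight:.2f}")
```

A lower temperature sharpens the weights toward the single best hit; a higher one spreads them more evenly across the kept documents.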

Privacy and Security Considerations

Enterprise adoption hinges on addressing privacy concerns. A 2023 case study in healthcare RAG systems demonstrated that limiting patient data to non-identifiable attributes still enabled accurate clinical recommendations.

Key privacy strategies include:

- Data minimization: Collecting only necessary information

- Differential privacy: Adding statistical noise to obscure individual data

- Anonymization: Removing personally identifiable information during retrieval
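Anonymization is often implemented as a redaction pass applied before text is indexed or sent to the model. The sketch below uses a few deliberately simple, hypothetical regular expressions; it will miss many identifiers (names, addresses, record numbers), which is why production systems typically rely on dedicated PII-detection tooling.

```python
import re

# Hypothetical, deliberately simple patterns. Order matters: the SSN pattern
# runs before the broader phone pattern so SSNs get the more specific label.
PII_PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]

def redact(text: str) -> str:
    """Replace each match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text

note = "Contact: jane.roe@example.com or +1 555-010-2233; SSN 123-45-6789."
print(redact(note))
```

Typed placeholders keep the sentence structure intact, so the redacted text still embeds and reads sensibly in a retrieved context.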

Cost Optimization

Cost-effective implementation requires:

- Efficient chunking strategies to minimize storage

- Smart caching to reduce redundant queries

- Compression techniques like scalar quantization

- Serverless architectures that scale with demand

The Future of AI Agent Memory

Emerging Trends for 2025 and Beyond

Advanced RAG systems in 2025 incorporate GraphRAG, which combines vector search with structured taxonomies and ontologies to bring context and logic into the retrieval process. Using knowledge graphs to interpret relationships between terms has been reported to boost search precision to as high as 99%.
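The GraphRAG idea can be illustrated with a two-stage toy: vector search picks seed documents, then a small knowledge graph pulls in documents about related entities that the query never mentioned. The documents, entity tags, graph edges, and similarity scores are all hypothetical, and real GraphRAG systems build their graphs and scoring in more elaborate ways.

```python
# Hypothetical corpus: each document is tagged with the entities it mentions.
DOCS = {
    "d1": {"text": "Metformin is a first-line therapy for type 2 diabetes.",
           "entities": {"metformin", "type 2 diabetes"}},
    "d2": {"text": "Lactic acidosis is a rare but serious risk of biguanides.",
           "entities": {"biguanide", "lactic acidosis"}},
    "d3": {"text": "Statins lower LDL cholesterol.",
           "entities": {"statin", "ldl cholesterol"}},
}
# Hypothetical knowledge-graph edges (e.g. from a drug-class taxonomy).
GRAPH = {
    "metformin": {"biguanide"},
    "biguanide": {"metformin"},
    "statin": {"ldl cholesterol"},
    "ldl cholesterol": {"statin"},
}

def graph_rag(similarities: dict[str, float], k: int = 1, hops: int = 1) -> list[str]:
    # 1) Vector step: top-k documents by (precomputed) similarity to the query.
    seeds = sorted(similarities, key=similarities.get, reverse=True)[:k]
    # 2) Graph step: expand the seeds' entities through the graph, hop by hop.
    expanded = set().union(*(DOCS[d]["entities"] for d in seeds))
    frontier = set(expanded)
    for _ in range(hops):
        frontier = set().union(*(GRAPH.get(e, set()) for e in frontier)) - expanded
        expanded |= frontier
    # 3) Append documents that mention any expanded entity.
    extra = [d for d in DOCS if d not in seeds and DOCS[d]["entities"] & expanded]
    return seeds + extra

# Hypothetical vector-search scores for the query "Is metformin safe?"
scores = {"d1": 0.88, "d3": 0.31, "d2": 0.22}
print(graph_rag(scores, k=1))          # seed d1, plus d2 via metformin -> biguanide
print(graph_rag(scores, k=1, hops=0))  # vector search alone: just d1
```

Vector search alone ranks the safety document last because it never says "metformin"; the graph edge from metformin to its drug class is what brings it back into the context.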

What's on the horizon:

- Multimodal RAG: Cross-modal retrieval is gaining traction, enabling RAG models to retrieve and generate across text, images, and even audio

- Real-Time RAG: Dynamic data retrieval from live feeds

- Agentic RAG: AI agents that autonomously decide when to retrieve information

- On-Device Vector Search: Privacy-preserving edge AI capabilities

The Agent Ecosystem

Gartner recently predicted that by 2028, 33% of enterprise software applications will include agentic AI, up from less than 1% in 2024, enabling 15% of day-to-day work decisions to be made autonomously.


This prediction represents a fundamental shift in enterprise computing. We're moving from:

- Stateless tools → Persistent, context-aware agents

- Reactive systems → Proactive assistants

- Task execution → Strategic decision-making

Cyfuture AI: Building the Future Today

Cyfuture AI is pioneering the next generation of AI infrastructure, enabling enterprises to harness the full potential of vector databases and RAG architectures. Our platform provides the scalability, security, and performance needed for production AI deployments that deliver real business value.

Unlock Intelligent AI Agents with Cyfuture AI

The era of stateless AI is over.

Vector databases have fundamentally transformed how AI agents operate, enabling them to maintain context, learn from interactions, and deliver increasingly accurate responses. As we've explored, this isn't just technological advancement—it's a revolution in how machines understand and interact with human knowledge.

The market trajectory speaks volumes: from $2.2 billion in 2024 to projections exceeding $13 billion by 2034, vector databases are becoming essential infrastructure for any serious AI deployment. Organizations that embrace this technology today will have a significant competitive advantage tomorrow.

Whether you're building healthcare diagnostics systems, financial analytics platforms, e-commerce recommendation engines, or customer service agents, vector databases provide the memory layer that transforms basic AI into truly intelligent assistants.

Ready to transform your AI capabilities?

Partner with Cyfuture AI to implement production-ready vector search and RAG architectures that deliver measurable business results. Our enterprise-grade platform combines cutting-edge technology with expert support to accelerate your AI journey.

The future of AI is contextual. The future is now.

Frequently Asked Questions (FAQs)

1. What exactly is a vector database and how does it differ from traditional databases?

A vector database is a specialized data management system designed to store, index, and query high-dimensional vector embeddings—numerical representations of unstructured data like text, images, and audio. Unlike traditional relational databases that use exact matches and structured queries, vector databases perform similarity searches based on semantic meaning, enabling AI systems to find contextually relevant information even when exact keywords don't match.

2. How do vector databases improve AI agent performance?

Vector databases improve AI agent performance by providing semantic memory that persists across interactions. They enable agents to retrieve relevant context in milliseconds, reduce hallucinations by grounding responses in factual data, sharply cut context-token usage compared to dumping everything into the prompt (one specialized memory system reported using 1/100th of the tokens), and maintain a personalized understanding of user preferences over time.

3. What is RAG (Retrieval-Augmented Generation) and why is it important?

RAG is an architectural pattern that combines information retrieval with text generation. When a user asks a question, the system first retrieves relevant documents from a vector database, then feeds this context to an LLM to generate an accurate response. RAG is crucial because it allows AI models to access current information without retraining, reduces hallucinations, provides source attribution for transparency, and enables customization with proprietary enterprise data.

4. Which industries benefit most from vector database implementations?

Multiple industries are seeing transformative results from vector databases. Healthcare organizations use them for clinical decision support and drug interaction analysis. Financial services leverage them for real-time market analysis and risk assessment. E-commerce platforms implement them for personalized recommendations and semantic product search. Legal firms deploy them for case law research and contract analysis. Customer service operations utilize them for intelligent ticket routing and support automation.

5. What are the key challenges in implementing vector database systems?

The main challenges include ensuring high-quality data preprocessing and chunking strategies, managing computational costs for large-scale deployments, balancing retrieval precision with system latency, maintaining data privacy and security compliance, and integrating seamlessly with existing tech stacks and workflows.

6. How much does it cost to implement vector database solutions?

Costs vary significantly based on scale and deployment model. Cloud-managed solutions like Pinecone start around $25/month for small projects, with free tiers available. Open-source options (Milvus, Weaviate) offer free software but require infrastructure and engineering resources. Enterprise deployments typically cost anywhere from thousands to tens of thousands of dollars per month, depending on query volume, storage requirements, and support needs.

7. What's the difference between vector databases and traditional search engines?

Traditional search engines rely on keyword matching and inverted indexes, requiring exact or close text matches. Vector databases understand semantic meaning and context, finding conceptually similar content even with completely different wording. They excel at handling unstructured data like images and audio, support similarity-based retrieval across different data types, and provide more nuanced relevance ranking based on semantic proximity.

8. How do I choose the right vector database for my project?

Selection depends on several factors. For scale requirements, consider whether you need millions or billions of vectors. For deployment preferences, decide between managed cloud services or self-hosted solutions. Performance needs include latency requirements and query throughput. Feature requirements encompass hybrid search capabilities, metadata filtering, and multimodal support. Budget constraints involve balancing operational costs with engineering resources.

9. What does the future hold for vector databases and AI agents?

The future includes several emerging trends. GraphRAG combines vector search with knowledge graphs, with reported search precision as high as 99%. Multimodal capabilities extend beyond text to images, audio, and video. Real-time RAG integrates live data feeds for up-to-the-second information. Agentic architectures let agents decide autonomously when to retrieve information. Edge deployment brings vector search to local devices for privacy and speed.

Author Bio:

Meghali is a tech-savvy content writer with expertise in AI, Cloud Computing, App Development, and Emerging Technologies. She excels at translating complex technical concepts into clear, engaging, and actionable content for developers, businesses, and tech enthusiasts. Meghali is passionate about helping readers stay informed and make the most of cutting-edge digital solutions.