The NVIDIA H100 GPU has become the global gold standard for training and deploying large language models (LLMs), generative AI systems, HPC workloads, and scientific computing. With India witnessing rapid AI adoption across enterprises, startups, and research institutions, demand for the H100 has surged sharply - and so have questions about its exact pricing, availability, and performance advantages over previous-generation GPUs like the A100.

This updated guide breaks down the NVIDIA H100 GPU price in India, compares PCIe vs SXM variants, explains why pricing varies, includes benchmark insights, and offers practical advice on choosing the right H100 configuration for your AI workloads.

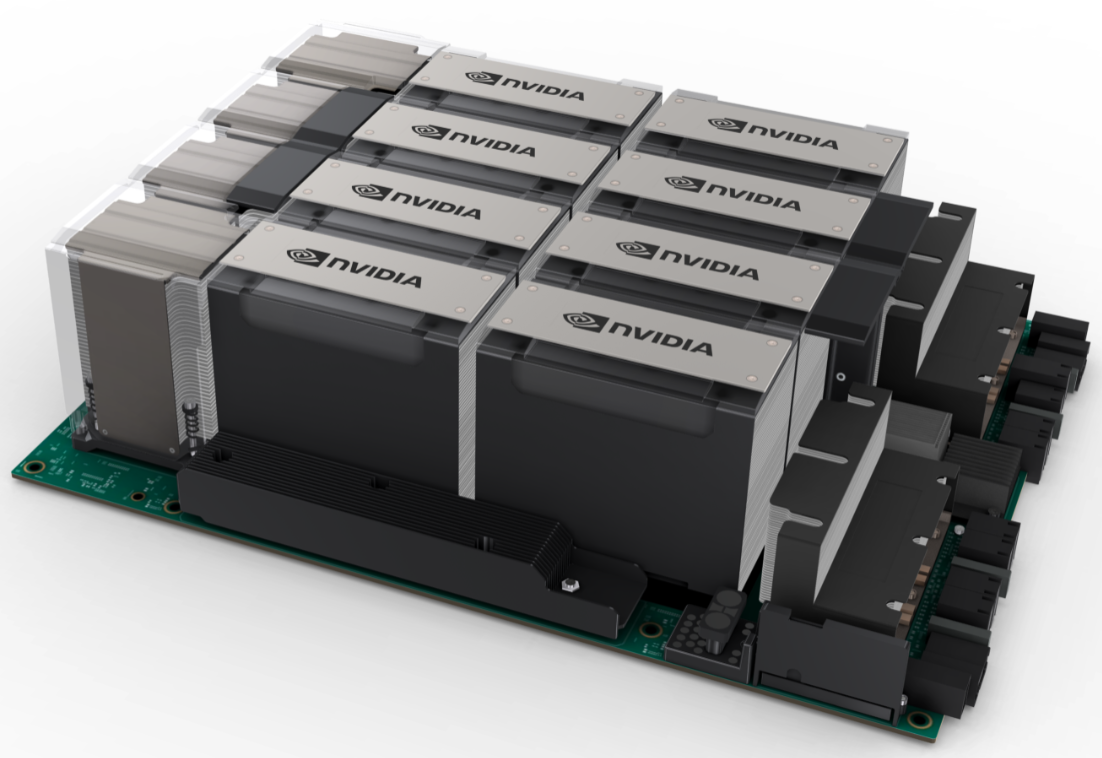

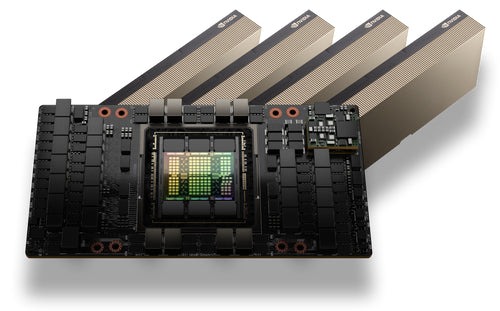

What is the NVIDIA H100 GPU?

The NVIDIA H100 is a data-center GPU based on the Hopper architecture and one of the most widely used accelerators for AI, built for:

- Large-scale LLM training (GPT, Llama, Mixtral, Claude)

- AI inference at massive throughput

- HPC workloads such as genomics, weather modeling, simulations

- Enterprise AI compute clusters

- Multi-GPU servers with 1.5× the NVLink bandwidth of the A100 (900 GB/s vs 600 GB/s)

Key innovations include:

- Transformer Engine for accelerated LLM workloads

- FP8 precision support for higher throughput

- Fourth-gen Tensor Cores

- NVLink 4.0 for high-bandwidth multi-GPU scaling

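- Up to 4× faster training and up to 30× faster inference vs A100 (NVIDIA's peak figures for the largest models; typical gains are smaller and depend on the workload)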
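
Whether a given GPU can use the FP8 path can be checked from its CUDA compute capability. The sketch below assumes the usual mapping (the A100 reports 8.0, the H100 reports 9.0, and FP8 Tensor Cores first appear at 8.9); the helper name `supports_fp8` is illustrative. In PyTorch, the capability tuple can be read with `torch.cuda.get_device_capability()`.

```python
def supports_fp8(capability: tuple) -> bool:
    """Return True if a CUDA compute capability has FP8 Tensor Cores.

    Assumption: FP8 Tensor Core support starts at compute capability 8.9
    and includes 9.0 (Hopper, i.e. the H100).
    """
    return tuple(capability) >= (8, 9)


# Compute capabilities: A100 is 8.0, H100 is 9.0.
for name, cap in {"A100": (8, 0), "H100": (9, 0)}.items():
    print(f"{name}: FP8 supported = {supports_fp8(cap)}")
```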

NVIDIA H100 GPU Price in India (2026)

The price of NVIDIA H100 GPUs in India depends on:

- Configuration (PCIe vs SXM)

- Import duties & customs

- Global supply fluctuations

- Vendor/distributor margins

- Availability in the Indian channel

Current H100 GPU Price Range in India (Updated 2026)

|

H100 Variant |

Memory |

Form Factor |

Price in India (Approx.) |

Best Use Cases |

|

NVIDIA H100 PCIe |

80GB |

PCIe |

₹40–45 Lakhs |

AI inference, mid-scale training, cloud nodes |

|

NVIDIA H100 SXM |

80GB |

SXM (NVLink) |

₹50–60 Lakhs |

Large-scale LLM training, GPU clusters, HPC |

|

NVIDIA H100 SXM (High Availability Regions) |

80GB |

SXM |

₹55–65 Lakhs |

Time-sensitive workloads with priority supply |

|

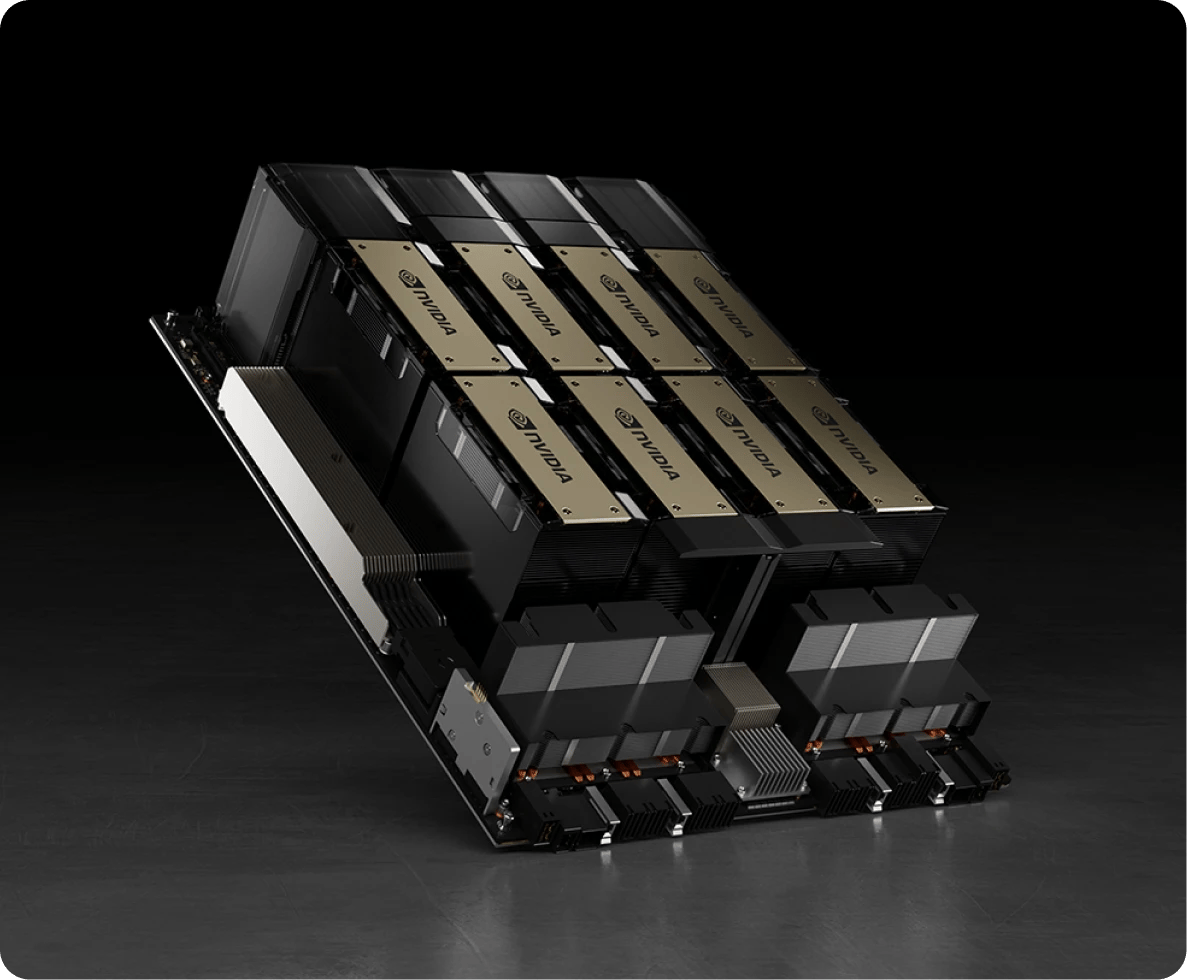

Multi-GPU H100 Servers (8×) |

80GB |

Rack server |

₹2.2–5.5 Crores |

Enterprise AI training clusters |

Note: Prices are approximate and can shift within weeks as global demand and import timelines change.
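
For budgeting, it helps to convert between lakhs and crores (1 crore = 100 lakhs). The sketch below multiplies the per-GPU SXM range from the table by eight to get a GPUs-only figure for an 8-GPU node. It deliberately leaves out the chassis, CPUs, networking, and taxes, so it is not a server quote; the function name is illustrative.

```python
LAKHS_PER_CRORE = 100


def gpu_only_cost_crores(price_lakhs_low, price_lakhs_high, gpu_count=8):
    """Return the (low, high) cost in crores for the GPUs alone."""
    low = price_lakhs_low * gpu_count / LAKHS_PER_CRORE
    high = price_lakhs_high * gpu_count / LAKHS_PER_CRORE
    return low, high


# Per-GPU H100 SXM range from the table: 50 to 60 lakhs.
low, high = gpu_only_cost_crores(50, 60)
print(f"8x H100 SXM, GPUs only: {low:.1f} to {high:.1f} crores")
```

Server quotes in the table span a wider range, since they depend on the GPU variant and on what the vendor bundles.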

H100 PCIe vs H100 SXM: Which Should You Buy?

Choosing the wrong variant can waste crores and months of engineering time. Here’s a clear breakdown:

1. H100 PCIe (80GB)

| Feature | Details |
|---|---|
| Memory | 80GB HBM2e |
| Interface | PCIe |
| Price in India | ₹40–45 Lakhs |
| Best For | AI inference, mid-scale model training, enterprise AI workloads, cloud GPU nodes |
| Advantage | Easier integration into existing PCIe servers, cost-effective for scaling inference clusters |

Pros

✔ Lower cost

✔ Fits into standard enterprise servers

✔ Ideal for fine-tuning smaller models

Cons

❌ Slower interconnect vs SXM

❌ Not suitable for large multi-GPU training workloads

2. H100 SXM (80GB, NVLink)

| Feature | Details |
|---|---|
| Memory | 80GB HBM3 |
| Interface | SXM (NVLink) |
| Price in India | ₹50–60 Lakhs |
| Best For | Large-scale AI training, LLM training, multi-GPU clusters, high-performance computing |
| Advantage | Higher bandwidth, better inter-GPU communication, ideal for distributed AI training |

Pros

✔ Best performance for LLMs

✔ Optimized for large-scale training

✔ Higher memory bandwidth

Cons

❌ Expensive

❌ Requires SXM-compatible servers
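
The interconnect difference matters most when gradients are synchronized across GPUs. As a rough illustration, the sketch below estimates an idealized ring all-reduce, in which each GPU moves 2 × (N − 1) / N of the payload over its link. The 900 GB/s NVLink figure is the one quoted in this guide's spec table; the 128 GB/s PCIe Gen5 x16 figure, the 7B-parameter model, and the function name are assumptions for illustration. Latency, topology, and protocol overhead are ignored.

```python
def ring_allreduce_seconds(payload_bytes, gpu_count, link_gb_per_s):
    """Idealized ring all-reduce time for one GPU's link (no latency term)."""
    traffic_bytes = 2 * (gpu_count - 1) / gpu_count * payload_bytes
    return traffic_bytes / (link_gb_per_s * 1e9)


GRADIENT_BYTES = 7e9 * 2      # hypothetical 7B-parameter model, BF16 gradients
NVLINK_GB_PER_S = 900 / 2     # 900 GB/s total, halved for one direction
PCIE_GB_PER_S = 128 / 2       # assumption: PCIe Gen5 x16, 128 GB/s total

nvlink = ring_allreduce_seconds(GRADIENT_BYTES, 8, NVLINK_GB_PER_S)
pcie = ring_allreduce_seconds(GRADIENT_BYTES, 8, PCIE_GB_PER_S)
print(f"NVLink: {nvlink * 1000:.0f} ms per all-reduce")
print(f"PCIe:   {pcie * 1000:.0f} ms per all-reduce")
print(f"PCIe is about {pcie / nvlink:.1f}x slower in this idealized model")
```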

Why Is the H100 GPU So Expensive in India?

1. Import Duties & Taxes

India applies:

- ~22–28% duty on AI compute hardware

- GST and logistics overhead
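
How these charges stack up can be sketched with simple compounding. The duty range is taken from the figures above; the 18% GST rate, the logistics amount, and the 30 lakh pre-duty price are assumptions chosen only for illustration, and the real tariff structure has more components than this.

```python
def landed_cost_lakhs(base_lakhs, duty_rate, gst_rate=0.18, logistics_lakhs=0.5):
    """Apply duty to the base price, GST to the duty-inclusive value,
    then add a flat logistics amount. All defaults are assumptions."""
    dutiable_value = base_lakhs * (1 + duty_rate)
    return dutiable_value * (1 + gst_rate) + logistics_lakhs


BASE_PRICE_LAKHS = 30  # hypothetical pre-duty price, not a quoted figure

for duty in (0.22, 0.28):
    cost = landed_cost_lakhs(BASE_PRICE_LAKHS, duty)
    print(f"Duty {duty:.0%}: landed cost of about {cost:.1f} lakhs")
```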

2. Global GPU Shortage

AI labs and enterprises worldwide are hoarding H100s.

3. Incredible Compute Performance

The H100 isn't a consumer GPU — it's a data-center-grade accelerator.

4. Limited Channel Distribution

Only authorized enterprise distributors are allowed to sell H100 units.

NVIDIA H100 GPU Benchmarks (Training & Inference)

LLM Training Performance

H100 delivers:

- Up to 4× faster training vs A100

- Large gains from FP8 support and faster BF16 Tensor Core operations

Inference Performance

- Up to 30× higher throughput in transformer-based inference (NVIDIA's peak figure for the largest models)

- Higher throughput across model sizes, including 7B, 13B, 70B, and 180B models

Example Workload:

Relative time to train Llama 70B, assuming the best-case 4× speedup:

| GPU | Time to Train (Relative) | Cost Efficiency |
|---|---|---|
| A100 | 1× | Moderate |
| H100 | 0.25× (4× faster) | Highest |
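
Whether the faster GPU is also cheaper per training run depends on the price ratio. Taking the relative times in the table at face value, an H100 run costs less than an A100 run whenever the H100's hourly price is below 4× the A100's. The hourly rates in this sketch are placeholders, not quotes.

```python
def relative_run_cost(relative_time, hourly_rate):
    """Cost of one training run in arbitrary units: time multiplied by rate."""
    return relative_time * hourly_rate


A100_RATE = 100   # hypothetical price per GPU-hour
H100_RATE = 250   # hypothetical price per GPU-hour

a100_cost = relative_run_cost(1.0, A100_RATE)
h100_cost = relative_run_cost(0.25, H100_RATE)
cost_ratio = h100_cost / a100_cost
print(f"H100 run costs {cost_ratio:.3f}x the A100 run at these rates")
```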

H100 vs A100: Should You Upgrade?

| Feature | A100 | H100 |
|---|---|---|
| Architecture | Ampere | Hopper |
| FP8 Support | ❌ | ✔ Yes |
| NVLink | Gen 3 | Gen 4 |
| Training Speed | Baseline | Up to 4× faster |
| Inference | Moderate | Up to 30× faster |
| Suitability | 2020–22 workloads | 2023–2030 workloads |

💡 If you work with LLMs > 13B parameters, the H100 is a necessity — not a luxury.
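
Model size is easier to reason about with a weight-memory estimate: parameters × bytes per parameter, compared against the 80GB on one card. The sketch below counts weights only (no activations, optimizer state, or KV cache), so real requirements are higher; the function names are illustrative.

```python
import math

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}
GPU_MEMORY_GB = 80  # memory per H100, as listed in this guide


def weight_memory_gb(params_billions, dtype):
    """Weights only: 1e9 parameters at 1 byte each is 1 GB."""
    return params_billions * BYTES_PER_PARAM[dtype]


def min_gpus_for_weights(params_billions, dtype):
    """Lower bound on GPU count just to hold the weights."""
    return math.ceil(weight_memory_gb(params_billions, dtype) / GPU_MEMORY_GB)


for size in (7, 13, 70, 180):
    gb = weight_memory_gb(size, "bf16")
    gpus = min_gpus_for_weights(size, "bf16")
    print(f"{size}B in BF16: {gb} GB of weights, at least {gpus} GPU(s)")
```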

Where to Buy NVIDIA H100 GPUs in India?

Most Indian enterprises purchase via:

- OEM server providers

- Enterprise-grade GPU cloud providers

- Official NVIDIA resellers

Deploy H100 GPUs Instantly with Cyfuture AI

If acquiring physical H100 GPUs involves long procurement cycles, Cyfuture AI offers:

- On-demand H100 GPU instances

- Secure enterprise-grade environments

- PCIe & SXM configurations

- Support for LLM training, fine-tuning, and generative AI workloads

- Dedicated cluster setups for enterprises

For current availability, pricing, or private clusters, you can contact our infrastructure team anytime.

NVIDIA H100 GPU Specifications (Detailed)

| Specification | Value |
|---|---|
| GPU Architecture | NVIDIA Hopper |
| Memory | 80GB HBM3 (SXM) / 80GB HBM2e (PCIe) |
| Memory Bandwidth | 3.35 TB/s (SXM) / 2 TB/s (PCIe) |
| FP8 Performance | Up to 3,958 TFLOPS (SXM, with sparsity) |
| NVLink | 900 GB/s (SXM) |
| TDP | 350W (PCIe) / 700W (SXM) |
| Process Node | TSMC 4N (4nm-class) |

Price Trends: Why H100 Costs Change Monthly

Factors affecting pricing:

- Global supply chain

- NVIDIA allocation to India

- High demand from LLM companies

- Silicon shortages

- Import delays

Expect a ±10–15% change every quarter.
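
For procurement planning, that swing can be turned into a budget band. This is a minimal sketch that applies the 10% and 15% swings to a quoted price; the 50 lakh quote is just the lower end of the SXM range in this guide, and the function name is illustrative.

```python
def price_band_lakhs(price_lakhs, swing):
    """Return the (low, high) price after a symmetric +/- swing."""
    return price_lakhs * (1 - swing), price_lakhs * (1 + swing)


QUOTE_LAKHS = 50  # example quote, lower end of the SXM range

for swing in (0.10, 0.15):
    low, high = price_band_lakhs(QUOTE_LAKHS, swing)
    print(f"+/-{swing:.0%}: {low:.1f} to {high:.1f} lakhs next quarter")
```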

Best Use Cases for the H100 GPU

✔ Training LLMs (Llama-3, GPT-style models)

✔ Generative AI model inference

✔ Advanced recommendation engines

✔ Multimodal AI workloads

✔ High-performance computing (HPC)

✔ AI research labs & universities

Read More: https://cyfuture.ai/blog/nvidia-h200-gpu-price-in-india-2026-specs-availability-and-performance

Conclusion

The NVIDIA H100 GPU remains one of the most capable and widely deployed AI accelerators, purpose-built for LLM and generative AI workloads. In India, prices range from ₹40 Lakhs to ₹60 Lakhs per GPU for the standard PCIe and SXM variants, depending on configuration and availability.

If you’re building:

- AI products

- LLM infrastructure

- Model training pipelines

- HPC workloads

…the H100 is the industry’s best choice.

For organizations looking for instant deployment without waiting months for hardware procurement, Cyfuture AI offers H100-powered clusters designed specifically for enterprise-scale AI.

FAQs: NVIDIA H100 GPU Price in India

1. What is the price of H100 in India?

Between ₹40 Lakhs and ₹60 Lakhs per GPU, depending on variant and availability.

2. Why is the H100 so expensive?

Enterprise-grade design, short supply, and import duties.

3. Can normal consumers buy the H100 GPU?

Typically no—it is a data center GPU requiring specialized servers.

4. How many H100s are needed to train an LLM?

For pre-training from scratch, a 70B model often needs 100–200 H100 SXM GPUs for efficient training; fine-tuning an existing model needs far fewer.
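
A GPU count like this can be sanity-checked with the common approximation that training takes about 6 × parameters × tokens floating-point operations. The sketch below assumes 2 trillion training tokens, a dense BF16 peak of roughly 989 TFLOPS per H100 SXM, and 40% hardware utilization. All three are assumptions, and real runs vary widely.

```python
SECONDS_PER_DAY = 86_400


def training_days(params, tokens, gpu_count, peak_flops_per_gpu, utilization):
    """Estimate wall-clock days using total FLOPs of about 6 * params * tokens."""
    total_flops = 6 * params * tokens
    cluster_flops_per_s = gpu_count * peak_flops_per_gpu * utilization
    return total_flops / cluster_flops_per_s / SECONDS_PER_DAY


PARAMS = 70e9          # 70B-parameter model
TOKENS = 2e12          # assumption: 2 trillion training tokens
PEAK_FLOPS = 989e12    # assumption: dense BF16 peak per H100 SXM
UTILIZATION = 0.40     # assumption: fraction of peak actually achieved

for gpus in (100, 200):
    days = training_days(PARAMS, TOKENS, gpus, PEAK_FLOPS, UTILIZATION)
    print(f"{gpus} GPUs: roughly {days:.0f} days")
```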

5. Is it better to rent than buy H100 GPUs?

If you need elasticity or temporary access, renting through GPU cloud providers is usually more cost-efficient.
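
A quick way to frame the decision is the break-even point: the purchase price divided by the hourly rental rate. The sketch below uses the lower end of the SXM price range from this guide and a placeholder rental rate that is not a quoted price; it also ignores power, cooling, hosting, and resale value, all of which shift the result.

```python
LAKH = 100_000
HOURS_PER_YEAR = 24 * 365


def break_even_hours(purchase_price_inr, hourly_rental_inr):
    """GPU-hours of rental that would cost as much as buying the card."""
    return purchase_price_inr / hourly_rental_inr


PURCHASE_PRICE_INR = 50 * LAKH   # lower end of the SXM range in this guide
HOURLY_RENTAL_INR = 250          # hypothetical rate per GPU-hour

hours = break_even_hours(PURCHASE_PRICE_INR, HOURLY_RENTAL_INR)
years = hours / HOURS_PER_YEAR
print(f"Break-even after {hours:,.0f} GPU-hours, about {years:.1f} years of continuous use")
```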