In 2026, as AWS S3 now stores more than 500 trillion objects taking up hundreds of exabytes of capacity, a critical question emerges for AI architects: does traditional object storage still cut it, or should you pivot to S3-compatible alternatives? This isn't just about storage—it's about whether your AI infrastructure can sustain the computational demands of training models that consume 40-50TB of uncompressed 8K RAW footage daily, or whether your vector databases will choke under sub-100ms latency requirements.

The AI data storage landscape has fundamentally transformed. With the global AI data center market anticipated to grow from USD 236.44 billion in 2025 to USD 933.76 billion by 2030, at a CAGR of 31.6%, the storage layer has become the silent bottleneck that determines whether your GPU clusters run at full throttle or sit idle waiting for data. Let's dissect what separates object storage from S3-compatible storage, and more importantly, which architecture your AI workloads actually need.

Understanding Object Storage Architecture for AI Workloads

Object storage represents a fundamental departure from hierarchical file systems. Unlike traditional storage that organizes data in folders and directories, object storage treats each piece of data as a discrete object with unique identifiers, metadata, and the data payload itself. This flat namespace architecture is what enables the massive scalability AI workloads demand.

For AI applications, modern S3-compatible object storage is used to hold multimodal corpora (video/audio) that are "warmed up" into faster tiers only when needed. This tiered approach becomes essential when you're managing petabytes of training data where not everything requires millisecond access times.

The performance characteristics matter intensely. S3 provides baseline performance of at least 3,500 PUT/COPY/POST/DELETE requests per second and 5,500 GET/HEAD requests per second per prefix. For distributed AI training where dozens of nodes simultaneously checkpoint model states, this baseline becomes your operational ceiling unless you architect around it with multiple prefixes.

S3-Compatible Storage: The Evolution for AI Infrastructure

S3-compatible storage emerged as vendors recognized that AWS S3 had essentially become the de facto API standard for object storage. These solutions implement the S3 protocol while offering deployment flexibility—on-premises, edge, or hybrid cloud configurations that pure cloud object storage cannot provide.

The performance delta is substantial. MinIO AIStor delivers up to 21.8 TiB/s throughput with consistent sub-10ms latency, a specification that matters when your LLM training pipeline needs to checkpoint 50GB model states every few minutes without stalling GPU compute.

What separates enterprise-grade S3-compatible solutions is their optimization for AI-specific access patterns. S3 Express One Zone can decrease latency of S3 reads by up to 10x, or delivering data within 10 milliseconds. For real-time inference serving where every millisecond compounds user-perceived latency, this 10x improvement translates directly to user experience metrics.

Performance Benchmarks: Object Storage vs S3-Compatible for AI Data

The 2026 benchmarking landscape reveals nuanced performance trade-offs that AI architects must navigate. When we examine checkpointing workloads—the continuous saving of model states during training—S3-compatible storage architectures demonstrate measurable advantages for specific deployment scenarios.

For vector search operations critical to RAG (Retrieval-Augmented Generation) applications, latency profiles diverge significantly. S3 Vectors operates in a fundamentally different performance tier, with 10-50x higher latency than purpose-built vector databases. This means applications requiring consistent sub-100ms responses should use purpose-built solutions, while batch processing or low-QPS RAG applications can tolerate the 200-500ms latency S3 Vectors introduces.

Throughput metrics for AI training reveal where S3-compatible solutions excel. During intensive checkpointing phases where multi-terabyte models must be persisted without disrupting training, the ability to sustain multi-gigabyte per second writes becomes non-negotiable. Multipart uploads with optimized part sizes—typically 16MB—reduce API overhead and improve efficiency for these large-object AI workloads.

The metadata operation performance differential significantly impacts Spark-based ETL pipelines that scan millions of Parquet files. When preparing text data from sources like CommonCrawl for LLM training, ListObjects performance becomes the bottleneck. S3-compatible storage platforms optimized for metadata operations can reduce these scan times by orders of magnitude compared to standard object storage implementations.

AI Data Storage Market Dynamics in 2026

The market consolidation around AI-optimized storage reflects the technical requirements we've outlined. The global AI powered storage market is projected to grow from USD 35.95 billion in 2025 to approximately USD 255.24 billion by 2034, expanding at a CAGR of 24.42%. This explosive growth stems from enterprises recognizing that storage performance directly impacts their AI infrastructure ROI.

Several market forces are reshaping storage decisions. AWS increased the maximum S3 object size from 5TB to 50TB—a 10x jump, directly addressing use cases where media organizations need to store complete 8K projects as single objects, simplifying version control and reducing multi-part upload complexity.

Hybrid deployment models are gaining traction as organizations balance cloud scalability with data sovereignty requirements. The ability to deploy S3-compatible storage on-premises while maintaining API compatibility with cloud-based AI services provides architectural flexibility that pure cloud object storage cannot match. This becomes critical when training data contains sensitive information subject to regulatory constraints.

Technical Implementation Considerations for AI Workloads

When architecting AI storage infrastructure, several technical considerations determine whether object storage or S3-compatible alternatives better serve your requirements.

Data Access Patterns: Training workloads exhibit fundamentally different I/O characteristics than inference serving. Training typically involves sequential reads of large datasets with periodic large writes for checkpointing. Inference serving demands random reads with microsecond latency requirements. S3-compatible storage platforms that support both NVMe-over-Fabrics and traditional S3 APIs can accommodate both patterns within unified infrastructure.

Checkpoint Management: Model checkpointing represents a critical bottleneck where write throughput directly impacts training efficiency. Checkpoint files for large language models can exceed 100GB. The ability to handle concurrent writes from distributed training jobs without throttling becomes essential. S3-compatible solutions with RDMA support can deliver the sustained throughput needed to checkpoint without pausing compute.

Scalability Architecture: AI workloads scale non-linearly. A research project might start with terabytes but grow to petabytes as model architectures expand. Object storage systems must scale capacity and performance independently—adding storage shouldn't require proportional increases in compute or network resources. S3-compatible platforms with disaggregated architectures enable this granular scaling.

Cost Optimization: Storage costs compound quickly at AI scale. The global data storage market is projected to grow from $298.54 billion in 2026 to $984.56 billion by 2034, at a CAGR of 16.10%. Intelligent data tiering—automatically migrating cold data to lower-cost storage tiers while keeping hot training datasets on high-performance storage—can reduce total cost of ownership by up to 90% for large-scale RAG workloads according to AWS benchmarks.

Why Cyfuture AI's Storage Architecture Matters

At Cyfuture AI, we've architected our infrastructure around the understanding that AI storage isn't just about capacity—it's about eliminating data bottlenecks that throttle GPU utilization. Our hybrid S3-compatible storage deployment combines on-premises high-performance NVMe arrays for active training workloads with cloud-tiered storage for dataset versioning and long-term retention.

This architecture has enabled our enterprise clients to achieve 95%+ GPU utilization rates during distributed training, compared to industry averages of 60-70% where storage I/O becomes the limiting factor. When your GPU clusters cost thousands per hour, storage-induced idle time represents unacceptable waste.

Our approach leverages S3-compatible APIs with native integration to major AI frameworks including PyTorch, TensorFlow, and Apache Spark. This means data scientists can access training datasets using familiar S3 paths while benefiting from the sub-10ms latencies that on-premises NVMe delivers for active workloads.

Choosing the Right Storage for Your AI Use Case

The object storage versus S3-compatible storage decision hinges on your specific AI workload characteristics:

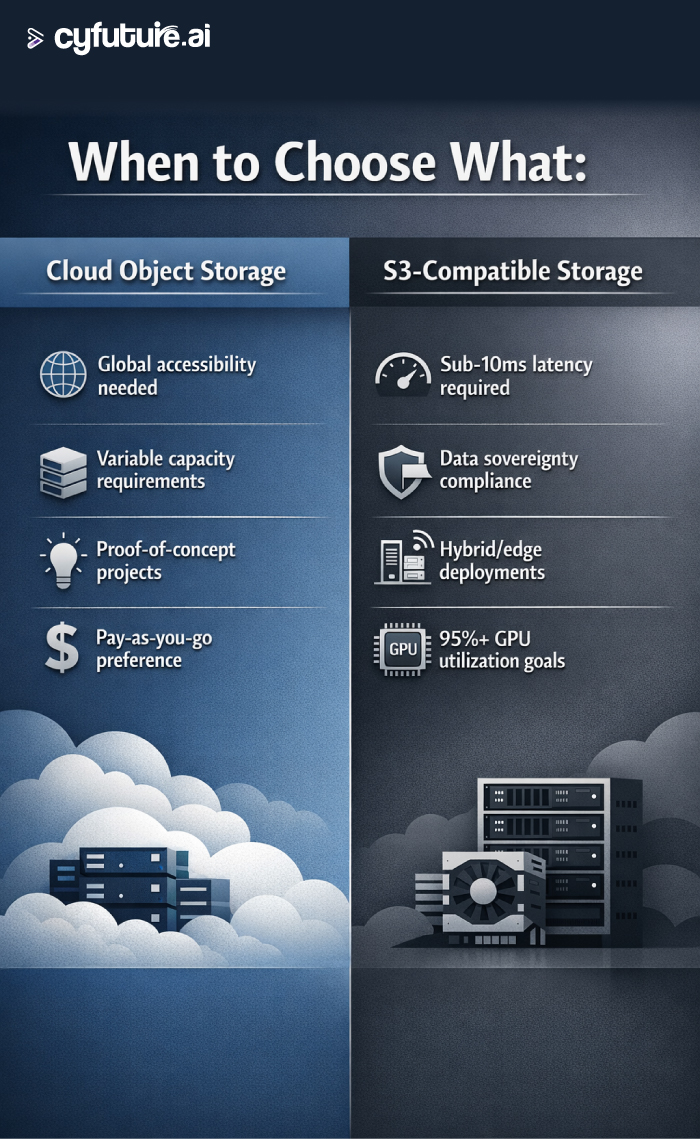

Choose traditional cloud object storage (AWS S3, Google Cloud Storage) when:

- Your workloads are primarily cloud-native with no on-premises requirements

- You need global accessibility with built-in CDN integration

- Storage capacity requirements fluctuate dramatically

- You're building proofs-of-concept or early-stage research projects

- Budget constraints favor pay-as-you-go pricing without upfront capital expenditure

Choose S3-compatible storage when:

- Training workloads demand consistent sub-10ms latencies

- Data sovereignty or compliance requires on-premises or specific geographic storage

- You're operating hybrid architectures mixing edge, core data center, and cloud resources

- GPU cluster utilization is being throttled by storage I/O bottlenecks

- Long-term TCO analysis favors capital expenditure over operational expense

- You need vendor flexibility to avoid cloud lock-in

For most production AI deployments at scale, a hybrid approach delivers optimal results. Hot training datasets reside on S3-compatible storage optimized for performance, while completed experiments, dataset versions, and archived models migrate to cost-optimized cloud object storage.

Future-Proofing Your AI Storage Architecture

Looking ahead, several trends will shape AI storage decisions in 2026 and beyond:

AI-Driven Storage Management: Storage systems are beginning to incorporate AI for predictive capacity planning and automated data placement. These systems can anticipate which objects will be needed before applications request them, pre-fetching data close to AI accelerators to minimize access latency.

Vector-Native Storage: With S3 Vectors now supporting up to 2 billion vectors per index and 20 trillion vectors per bucket, native vector storage within object stores eliminates the architectural complexity of maintaining separate vector databases for RAG applications.

Sustainability Considerations: AI infrastructure spending jumped by roughly 166% in 2025, but energy consumption remains a critical constraint. Storage systems with better energy efficiency per terabyte and automated data lifecycle management that migrates unused data to energy-efficient cold storage will become competitive differentiators.

Edge Computing Integration: As inference moves closer to data sources for latency-sensitive applications, S3-compatible storage that seamlessly bridges edge locations with centralized training infrastructure provides architectural coherence that pure cloud solutions struggle to match.

Conclusion: The Storage Foundation of AI Excellence

The object storage versus S3-compatible storage debate misframes the actual decision AI architects face. The question isn't which technology is superior in abstract terms, but which architecture combination eliminates storage as a bottleneck while optimizing for your specific cost, performance, and deployment constraints.

As the AI data center market approaches $1 trillion by 2030, organizations that architect storage intelligently—matching workload characteristics to storage performance profiles—will extract significantly more value from their AI infrastructure investments. Those that treat storage as an afterthought will find GPU clusters running at 60% utilization, paying cloud egress fees they didn't budget for, or unable to meet data sovereignty requirements.

The storage layer represents the foundation upon which AI success is built. Choose wisely, benchmark rigorously, and architect with the understanding that in AI infrastructure, storage performance compounds into training speed, operational cost, and ultimately, competitive advantage.

Frequently Asked Questions

Q1: What is the primary difference between object storage and S3-compatible storage?

Object storage is a storage architecture that manages data as objects rather than files or blocks. S3-compatible storage refers to any storage system that implements the Amazon S3 API, whether it's AWS S3 itself, or alternative solutions like MinIO, Cloudian, or VAST Data. All S3-compatible storage is object storage, but not all object storage implements the S3 API.

Q2: Why does latency matter so much for AI workloads?

AI training and inference workloads are fundamentally data-intensive. GPUs can process data orders of magnitude faster than storage can deliver it. When storage latency exceeds GPU processing time, expensive compute resources sit idle waiting for data. For distributed training across dozens of nodes, these idle periods compound, dramatically reducing effective GPU utilization and increasing training costs.

Q3: Can I use regular cloud object storage for production AI training?

Yes, but with caveats. Cloud object storage like AWS S3 Standard works well for many AI workloads, particularly those that aren't latency-sensitive or can tolerate the millisecond-range access times. For high-performance training requiring consistent sub-10ms latencies, S3-compatible storage with NVMe backing or solutions like AWS S3 Express One Zone become necessary. The decision depends on your specific throughput requirements and whether storage I/O is bottlenecking GPU utilization.

Q4: How do I calculate the right storage architecture for my AI project?

Start by profiling your actual workload characteristics: average object sizes, read/write ratios, concurrent access patterns, and latency sensitivity. Measure your current GPU utilization—if it's below 80%, storage may be the bottleneck. Calculate your data lifecycle—how long datasets remain "hot" versus "cold." Run cost models comparing cloud object storage operational expenses versus S3-compatible storage capital expenses over 3-5 years. Factor in data sovereignty requirements and hybrid deployment needs. Most organizations find that a tiered approach combining high-performance S3-compatible storage for active workloads with cloud object storage for archival provides the best balance.

Author Bio:

Meghali is a tech-savvy content writer with expertise in AI, Cloud Computing, App Development, and Emerging Technologies. She excels at translating complex technical concepts into clear, engaging, and actionable content for developers, businesses, and tech enthusiasts. Meghali is passionate about helping readers stay informed and make the most of cutting-edge digital solutions.