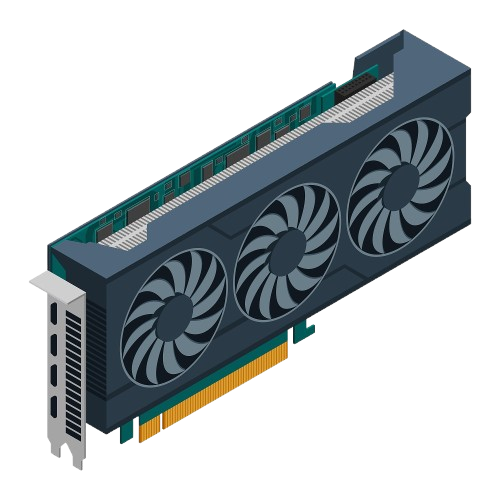

Why Choose Cyfuture AI's H200 SXM GPU Server

Experience next-generation AI performance with Cyfuture AI's NVIDIA H200 SXM GPU servers, engineered for large-scale AI training, high-performance computing, and enterprise-grade inference. Built on NVIDIA's Hopper architecture, each H200 SXM GPU provides 141 GB of HBM3e memory and 4.8 TB/s of memory bandwidth, delivering up to 10X faster performance for the most demanding workloads. Whether you're scaling LLMs, running real-time inference, or building generative AI applications, Cyfuture AI delivers infrastructure built for limitless innovation.

With Cyfuture AI, you can buy H200 GPU servers and GPU clusters without heavy upfront costs and with transparent, performance-based pricing. Our enterprise-ready infrastructure, backed by round-the-clock support and turnkey deployment services, gives AI teams and data scientists a smooth, reliable experience.

Proven Expertise

With years of experience deploying enterprise AI infrastructure, Cyfuture AI understands the unique requirements of demanding computational workloads.

White-Glove Service

From initial consultation through deployment and ongoing support, our team ensures your infrastructure delivers optimal performance.

Competitive Pricing

We offer transparent, competitive pricing on NVIDIA H200 SXM GPU servers without compromising on quality or support.

Flexible Deployment

Whether you need a single GPU server or a multi-node cluster, we tailor each solution to your specific requirements and growth trajectory.