Introduction: The Real Price of AI Customization

In 2026, fine-tuning large language models has become the cornerstone of enterprise AI strategy, but the financial reality often catches organizations off-guard. A recent survey by Stanford HAI reveals that 73% of companies underestimate their AI fine-tuning budgets by 40-60% in their first year. The culprit? Hidden costs in GPU compute hours, exponential storage demands, and infrastructure overhead that can transform a $50,000 project into a $300,000 commitment overnight.

For tech leaders navigating this landscape, understanding the granular economics of fine-tuning isn't optional—it's survival. Whether you're a CTO allocating Q1 budgets, a developer architecting your first custom model, or a student planning a research project, the difference between success and budget overrun lies in mastering three core cost pillars: GPU computational hours, persistent storage infrastructure, and the often-overlooked auxiliary expenses that compound both.

GPU Hours: The Heavyweight of Fine-Tuning Costs

Current GPU Pricing Landscape in 2026

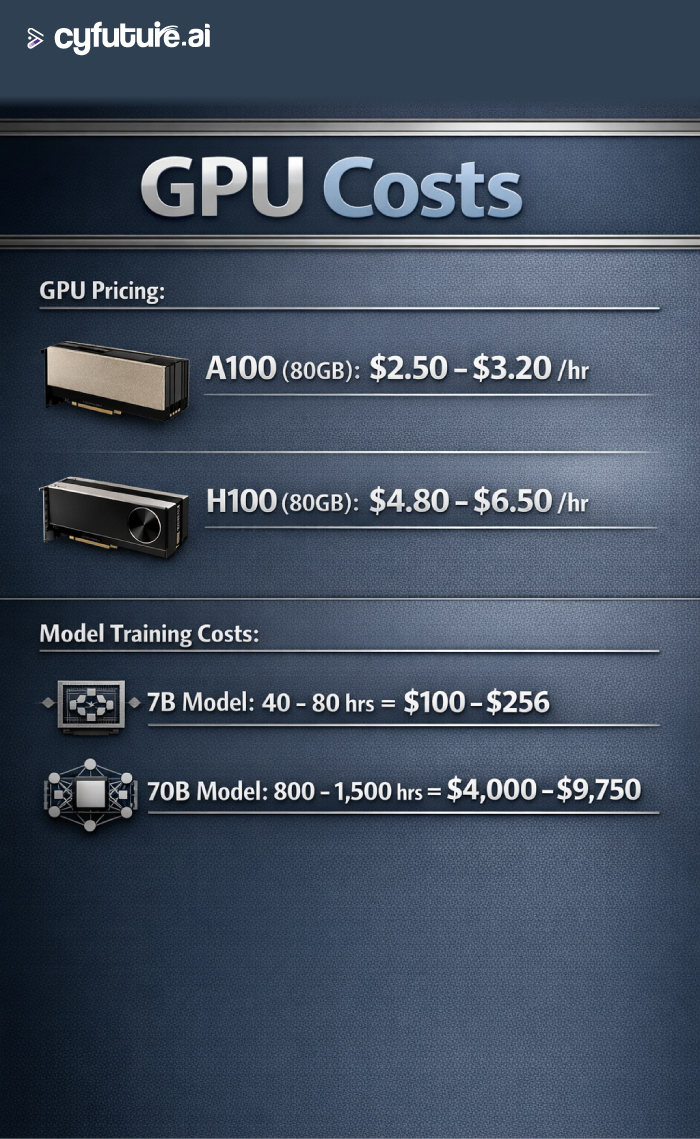

The GPU market has evolved dramatically. According to Nvidia's Q4 2025 enterprise pricing data, hourly rates for popular training configurations are:

- A100 (80GB): $2.50-$3.20/hour on major cloud platforms

- H100 (80GB): $4.80-$6.50/hour with premium tier access

- H200: $8.00-$10.50/hour for cutting-edge performance

- AMD MI300X: $3.80-$5.20/hour as a competitive alternative

Fine-tuning a 7B parameter model like Llama 3.1 typically consumes 40-80 GPU hours on A100 infrastructure, translating to $100-$256 per iteration. Scale that to a 70B parameter model, and you're looking at 800-1,500 hours—potentially $4,000-$9,750 for a single comprehensive fine-tuning run.

Multi-GPU Training Economics

Organizations pursuing distributed training face multiplicative costs. A 2026 study by MLCommons shows that fine-tuning GPT-class models with 175B parameters using 64 H100 GPUs for optimal throughput costs approximately $20,480 per 24-hour training cycle. With typical projects requiring 3-7 iterations to achieve production-ready performance, total GPU expenditure ranges from $61,440 to $143,360.

Cyfuture AI's GPU optimization framework has demonstrated 35% cost reduction for clients through intelligent batch scheduling and mixed-precision training strategies, bringing these enterprise-scale projects within reach of mid-market organizations.

Storage Costs: The Silent Budget Killer

Training Data and Checkpoint Requirements

Storage demands extend far beyond initial dataset hosting. Each fine-tuning checkpoint for a 70B model occupies approximately 140GB in standard precision (fp32) or 70GB in mixed precision (fp16/bf16). Organizations maintaining proper version control typically store:

- Original pretrained model: 140-280GB

- Training datasets: 50-500GB depending on domain

- Intermediate checkpoints (5-10 per run): 350-700GB

- Final optimized models: 140-280GB

- Metadata and logging: 10-50GB

Total storage per project: 690GB to 1.8TB

With enterprise-grade cloud storage (AWS S3, Google Cloud Storage, Azure Blob) costing $0.023-$0.036 per GB/month for standard tiers in 2026, a single fine-tuning project demands $15.87-$64.80 monthly in perpetuity—or $190-$778 annually per model variant.

The Compounding Effect

Organizations managing 10-20 model variants simultaneously face storage bills of $1,900-$15,560 annually, excluding egress fees which add 15-25% overhead when moving data between regions or downloading for inference deployment.

Total Budget Breakdown: The Complete Picture

Hidden Costs That Add Up

Beyond GPU and storage, comprehensive fine-tuning budgets must account for:

- Data preparation infrastructure: $5,000-$25,000 for preprocessing pipelines, annotation tools, and quality validation systems

- Networking costs: Data transfer between storage and compute can add $500-$2,000 per project

- Monitoring and observability: MLOps platforms cost $200-$1,500 monthly

- Human expertise: ML engineers command $150,000-$300,000 annually; allocate 20-40% FTE per active project

Real-World Budget Example: Enterprise Deployment

For a mid-sized enterprise fine-tuning a 70B model for customer service automation in 2026:

- GPU compute (5 iterations, H100): $35,000

- Storage (18 months): $1,167

- Data preparation: $15,000

- Infrastructure overhead: $3,500

- Personnel (30% of 2 engineers for 3 months): $37,500

- Total first-year cost: $92,167

Subsequent iterations drop to $40,000-$50,000 as infrastructure and expertise mature.

Cyfuture AI managed fine-tuning service reduces these costs by 45% through shared infrastructure, automated pipeline optimization, and expert-guided model selection—delivering enterprise-grade results at startup-friendly budgets.

Frequently Asked Questions

Q1: How much does it cost to fine-tune a 7B parameter model in 2026?

For a typical 7B parameter model like Llama 3.1 or Mistral, expect $100-$400 for GPU compute (40-120 hours on A100 GPUs), plus $50-$200 for storage and infrastructure. Total project costs range from $500-$2,000 including data preparation for small-scale implementations, or $5,000-$15,000 for production-grade enterprise deployments with proper validation and testing.

Q2: What's the most cost-effective GPU for fine-tuning in 2026?

The A100 (80GB) offers the best price-performance ratio at $2.50-$3.20/hour, delivering 90% of H100 performance for 50% of the cost. For budget-conscious projects under 13B parameters, AMD MI300X at $3.80-$5.20/hour provides competitive performance. H100 and H200 GPUs are justified only for 70B+ parameter models or time-critical deployments where the 2-3x speed advantage justifies premium pricing.

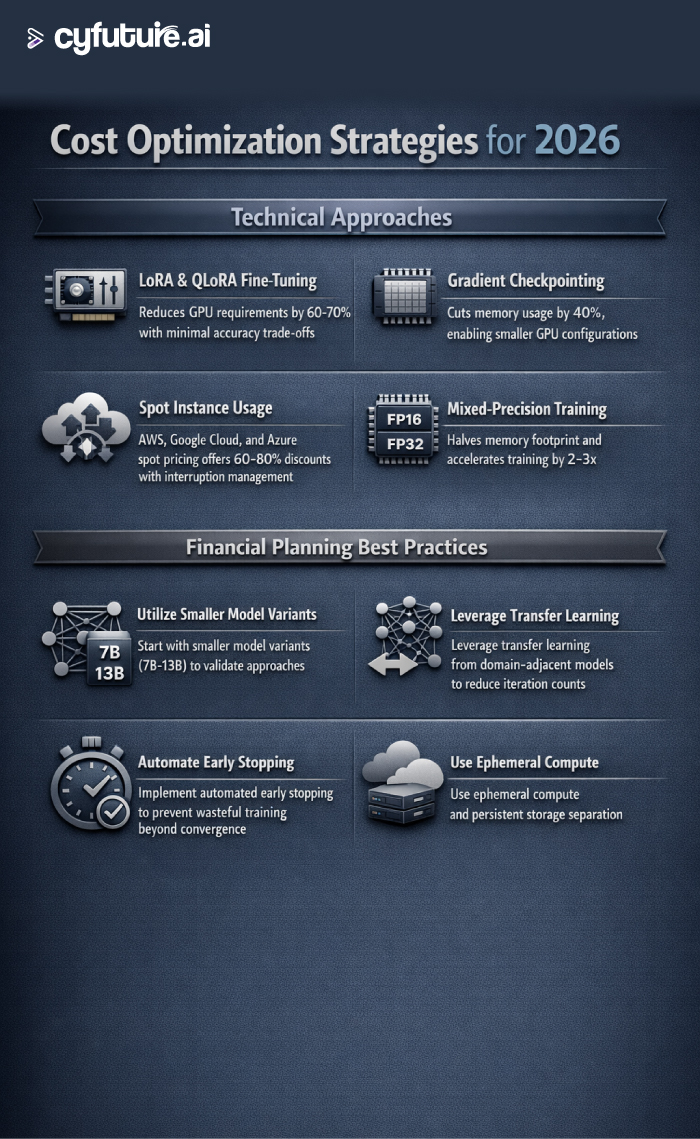

Q3: Can I reduce fine-tuning costs without sacrificing model quality?

Absolutely. Implementing LoRA (Low-Rank Adaptation) reduces GPU requirements by 60-70% while maintaining 95-98% of full fine-tuning performance. QLoRA pushes this further with 4-bit quantization, enabling 70B model fine-tuning on single consumer GPUs. Additionally, using spot instances with automatic failover can cut compute costs by 60-80%. The key is matching optimization strategy to your latency tolerance and performance requirements.

Q4: How do storage costs scale with model size and project duration?

Storage costs scale linearly with model parameters and checkpoint frequency. A 7B model with 5 checkpoints requires approximately 100GB ($2.30-$3.60/month), while a 70B model needs 1TB+ ($23-$36/month). Over 18 months, this compounds: expect $41-$65 for 7B models versus $414-$648 for 70B models. Organizations maintaining multiple variants should implement automated checkpoint pruning and archival strategies to cold storage tiers, reducing long-term costs by 40-60%.

Author Bio:

Meghali is a tech-savvy content writer with expertise in AI, Cloud Computing, App Development, and Emerging Technologies. She excels at translating complex technical concepts into clear, engaging, and actionable content for developers, businesses, and tech enthusiasts. Meghali is passionate about helping readers stay informed and make the most of cutting-edge digital solutions.