Are You Struggling to Scale Your Generative AI and Chatbot Projects?

GPU as a Service (GPUaaS) is changing how enterprises and developers build generative AI applications and intelligent chatbots by providing on-demand access to high-performance Graphics Processing Units without massive upfront infrastructure investments. This cloud-based computing model shifts GPU spending from capital expenditure to operational expenditure, letting organizations scale AI workloads dynamically. The newest GPU generations deliver up to 77% higher training throughput than their predecessors, and costs can fall by 50-70% compared to traditional on-premises deployments.

Here's the reality:

Building generative AI models and sophisticated chatbots requires immense computational power. Training a large language model can cost upwards of $100 million using traditional infrastructure. The NVIDIA H100 GPU alone can cost $25,000-$40,000 per unit, and that's before accounting for cooling systems, data center space, maintenance teams, and the 6-12 month procurement cycles.

But there's a better way.

What is GPUaaS (GPU as a Service)?

GPU as a Service (GPUaaS) is a cloud-based infrastructure model that provides on-demand access to powerful Graphics Processing Units for compute-intensive workloads without requiring physical hardware ownership. Users can rent GPU resources from cloud providers on flexible pricing models—hourly rates starting from $0.52 for high-end H100 GPUs or subscription-based plans—paying only for actual usage rather than maintaining expensive on-premises infrastructure.

Think of it this way:

Instead of purchasing a $290,000 GPU cluster that sits idle 40% of the time, you access the exact computational power you need, when you need it, through the cloud. It's like switching from owning a fleet of trucks to using on-demand logistics services.

The GPUaaS Market Explosion: Why 2026 is the Breakthrough Year

The numbers tell a compelling story.

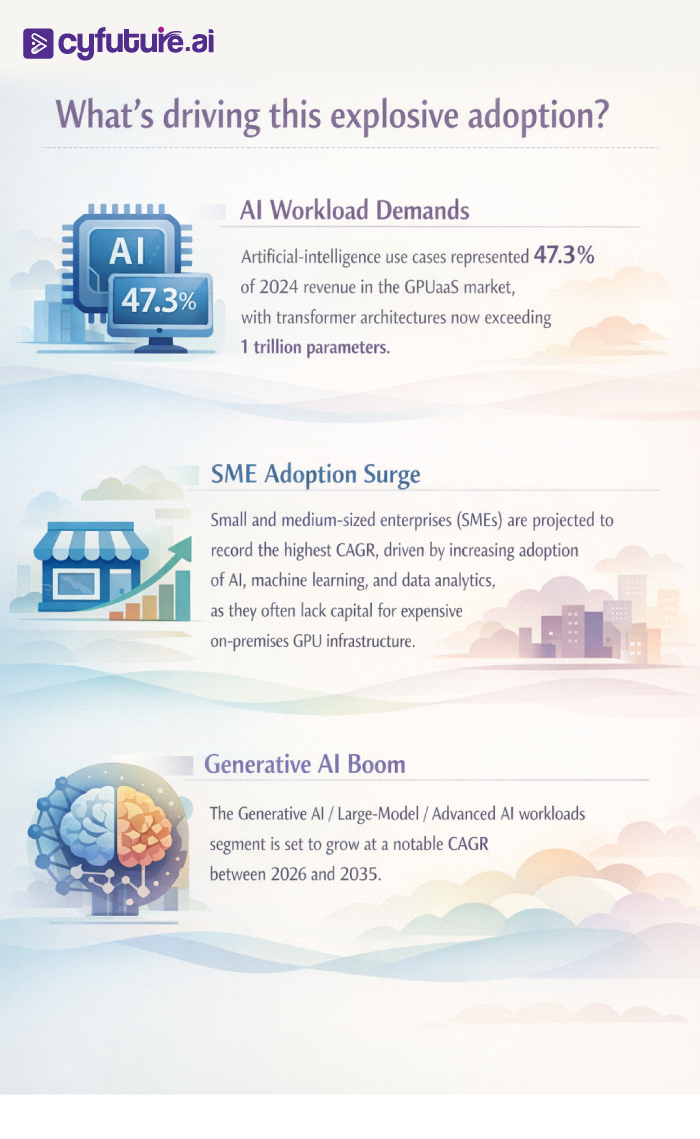

The global GPU as a Service market was estimated at USD 4,372.3 million in 2025 and is projected to reach USD 14,458.4 million by 2033, growing at a CAGR of 16.0% from 2026 to 2033.

More aggressive estimates suggest even faster growth: The global GPU as a Service market is expected to grow from USD 8.21 billion in 2025 to USD 26.62 billion by 2030 at a CAGR of 26.5%.
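Both growth rates follow from the standard compound annual growth rate formula, which makes them easy to sanity-check. The sketch below is illustrative only: the function name `cagr` is our own, and it assumes eight compounding years for 2025 to 2033 and five for 2025 to 2030.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.16 means 16%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above (USD millions for the first pair, USD billions for the second).
conservative = cagr(4372.3, 14458.4, years=8)  # 2025 -> 2033
aggressive = cagr(8.21, 26.62, years=5)        # 2025 -> 2030

print(f"Conservative estimate: {conservative:.1%}")
print(f"Aggressive estimate:   {aggressive:.1%}")
```

Small differences from the published rates can come from which year is treated as the base.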

How GPUaaS Transforms Generative AI Development

Breaking Through the Training Time Barrier

Traditional CPU-based training? Forget about it.

Here's what changes with GPUaaS:

Speed Improvements That Matter:

- The H100 features fourth-generation Tensor Cores and a dedicated Transformer Engine with FP8 precision that provides up to 4X faster training over the prior generation for GPT-3 (175B) models.

- Benchmarks show the B200 delivers up to 77% faster throughput than the H100 and over 20 PFLOPS of FP8 performance per node.

Real-World Impact: What used to take weeks of training can now be completed in days. A chatbot model that required 14 days on traditional infrastructure can be trained in 3-4 days using GPUaaS with H100 or B200 GPUs.
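Throughput gains and training-time savings are easy to mix up, so a quick conversion helps when reading benchmark claims like these. The sketch below uses illustrative function names and assumes ideal scaling, where wall-clock time is inversely proportional to throughput; real jobs also spend time on data loading and communication, so treat the results as best-case figures.

```python
def time_saved_fraction(throughput_gain: float) -> float:
    """Fraction of wall-clock time saved when throughput rises by `throughput_gain`.

    A gain of 0.77 means the new hardware processes 1.77x as much work per second.
    """
    if throughput_gain <= -1:
        raise ValueError("throughput_gain must be greater than -1")
    return 1 - 1 / (1 + throughput_gain)


def projected_days(baseline_days: float, speedup: float) -> float:
    """Training duration after an ideal `speedup`-times improvement."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_days / speedup


print(f"77% more throughput saves {time_saved_fraction(0.77):.1%} of the time")
print(f"A 14-day run at 4x speed takes {projected_days(14, 4.0)} days")
```

Under that assumption, a 4X speedup turns a 14-day run into 3.5 days, which is in line with the 3-4 day range above.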

The Architecture Advantage for Chatbots

Modern chatbots aren't simple rule-based systems anymore. They're sophisticated AI agents powered by:

- Large Language Models (LLMs) – Requiring billions of parameters

- Real-time inference – Processing responses in under 800ms for natural conversation flow

- Continuous learning – Adapting from user interactions

GPUs enable real-time inference for tasks like fraud detection, autonomous driving and chatbots, with their high throughput and low latency making them ideal for deploying models in production environments that require instant decision-making.

The Cost Economics: Why GPUaaS Beats Traditional Infrastructure

Let's talk numbers—because CFOs care about ROI.

Traditional On-Premises Approach:

- GPU Hardware: $25,000-$40,000 per unit × 8 GPUs = $200,000-$320,000

- Infrastructure: Data center space, cooling, power redundancy = $50,000-$100,000

- Maintenance: IT staff, driver updates, hardware management = $75,000/year

- Depreciation: 3-year lifecycle = 33% annual value loss

- Utilization Risk: Average idle time 40-60% = wasted capital

Total Year 1 Investment: roughly $325,000-$495,000 (hardware, infrastructure, and first-year maintenance)

GPUaaS Approach:

- H100 GPU: $1.77 to $13 per hour depending on the provider and configuration

- Pay-per-use flexibility: Scale from 1 GPU to 100s instantly

- Zero maintenance burden: Provider handles all infrastructure

- Latest hardware access: Automatic updates to newest GPU generations

- Perfect utilization: Pay only for active compute time

Typical Year 1 Cost for Similar Workload: $120,000-$200,000

That's 50-70% cost savings while maintaining superior performance.
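To rerun this comparison with your own numbers, a rough calculator is enough. The sketch below sums only the itemized dollar figures above (hardware, infrastructure, maintenance) and prices GPUaaS at the lowest quoted H100 rate. The function names and the always-on usage pattern are assumptions for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours


def on_prem_year_one(gpu_unit_cost: float, num_gpus: int,
                     infrastructure: float, maintenance: float) -> float:
    """First-year outlay: hardware purchase plus infrastructure and staffing."""
    return gpu_unit_cost * num_gpus + infrastructure + maintenance


def gpuaas_annual(hourly_rate: float, num_gpus: int, utilization: float = 1.0) -> float:
    """Yearly rental cost; `utilization` is the fraction of hours actually billed."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return hourly_rate * num_gpus * HOURS_PER_YEAR * utilization


def savings_fraction(on_prem: float, rented: float) -> float:
    """Share of the on-premises cost avoided by renting (negative if renting costs more)."""
    return 1 - rented / on_prem


low = on_prem_year_one(25_000, 8, 50_000, 75_000)
high = on_prem_year_one(40_000, 8, 100_000, 75_000)
rented = gpuaas_annual(1.77, 8)  # eight H100s billed for every hour of the year

print(f"On-premises year one: ${low:,.0f} to ${high:,.0f}")
print(f"GPUaaS at $1.77/hour, always on: ${rented:,.0f}")
print(f"Savings: {savings_fraction(low, rented):.0%} to {savings_fraction(high, rented):.0%}")
```

The result is sensitive to the inputs: at the $13 end of the price range, an always-on eight-GPU cluster would cost more to rent than to buy, so the hourly rate and the number of billed hours drive the outcome.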

40 percent of organizations say they use GPU-as-a-Service (GPUaaS) today, up from 34 percent last year. They're not making this shift because it's trendy—they're doing it because the economics are undeniable.

GPUaaS Accelerates Every Stage of Chatbot Development

Stage 1: Data Preprocessing and Model Selection

The Challenge: Modern chatbots require processing massive conversational datasets—often millions of user interactions containing text, context, intent labels, and entity recognition markers.

GPUaaS Solution: GPUs offer high memory bandwidth and parallel processing, so the large training, testing, and validation datasets typical of machine learning workflows can be processed quickly on rented GPU instances.

Practical Benefit: What previously took 2-3 weeks of data preparation compresses to 3-4 days with GPU-accelerated preprocessing pipelines.

Stage 2: Model Training and Fine-Tuning

The Challenge: Training conversational AI requires iterating through billions of parameters across multiple epochs. GPT-4 reportedly trained on thousands of GPUs over several weeks, with an estimated cost of $100 million.

GPUaaS Solution: Access to specialized hardware without procurement delays:

- The H100 carries 80GB of HBM3, 3.35 TB/s bandwidth, and 4th-gen Tensor Cores connected via NVLink 4.0 (900 GB/s)

- The B200 delivers up to 192GB of HBM3e, 8 TB/s bandwidth, and 5th-gen Tensor Cores supporting FP4 and FP6

Practical Benefit: Distributed training across multiple GPUs reduces training time from weeks to days, enabling faster iteration and experimentation.
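The memory capacities above determine how many GPUs a model needs before training even starts. As a back-of-the-envelope sketch, the snippet below estimates the memory taken by model weights alone at different precisions. The function names are illustrative, and the estimate deliberately ignores gradients, optimizer state, and activations, which typically multiply the real training footprint several times over.

```python
import math

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}
GPU_MEMORY_GB = {"H100": 80, "B200": 192}  # capacities quoted above


def weights_gb(num_params: float, precision: str) -> float:
    """Memory for the weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def min_gpus_for_weights(num_params: float, precision: str, gpu: str) -> int:
    """Smallest GPU count whose combined memory holds the weights."""
    return math.ceil(weights_gb(num_params, precision) / GPU_MEMORY_GB[gpu])


for gpu in GPU_MEMORY_GB:
    count = min_gpus_for_weights(175e9, "fp16", gpu)
    print(f"175B parameters in FP16 need at least {count} x {gpu} for weights alone")
```

A 7B-parameter chatbot model in FP16 needs about 14 GB for its weights, which is why smaller models fit on a single GPU while GPT-3-scale models require multi-GPU clusters.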

Stage 3: Inference and Deployment

The Challenge: In natural human conversation, responses arrive within about 500 milliseconds, with production voice agents typically aiming for 800ms or lower end-to-end latency to maintain conversational flow.

GPUaaS Solution: Running real-time natural language processing (NLP) tasks, like customer support chatbots, can be efficiently managed with NVIDIA Triton Inference Server hosted on GPUaaS platforms.

Practical Benefit: Scalable inference infrastructure that automatically adjusts to user demand—handling 100 concurrent users or 100,000 without manual intervention.
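An auto-scaler ultimately answers one question: how many inference replicas does the current traffic need? The sketch below shows one simple way to estimate that. The function name, the 30% headroom, and the per-replica throughput of 45 requests per second are all hypothetical; a real figure should come from load-testing your own model.

```python
import math


def replicas_needed(concurrent_users: int, requests_per_user_per_min: float,
                    per_replica_rps: float, headroom: float = 0.3) -> int:
    """Replica count for the expected request rate, with spare capacity for bursts."""
    if per_replica_rps <= 0:
        raise ValueError("per_replica_rps must be positive")
    peak_rps = concurrent_users * requests_per_user_per_min / 60
    return max(1, math.ceil(peak_rps * (1 + headroom) / per_replica_rps))


for users in (100, 100_000):
    count = replicas_needed(users, requests_per_user_per_min=6, per_replica_rps=45)
    print(f"{users:>7,} concurrent users -> {count} replica(s)")
```

A managed platform would recompute this continuously from live metrics; the arithmetic is the same.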

What Makes Cyfuture AI's GPUaaS Different

At Cyfuture AI, we understand that generative AI and chatbot development isn't just about raw computational power—it's about the entire ecosystem that enables innovation.

Enterprise-Grade GPU Infrastructure

Our infrastructure delivers what AI teams actually need:

Cutting-Edge Hardware Portfolio:

- NVIDIA H100 GPUs – Industry-leading throughput for training and generative AI workloads

- NVIDIA A100 GPU Clusters – Multi-Instance GPU (MIG) capabilities for scalable AI training

- NVIDIA L40S GPUs – Perfect balance for inference and visualization tasks

Managed GPU Clusters: Expertly configured clusters interconnected via NVLink and InfiniBand, offering unmatched scalability and performance reliability for demanding enterprise workloads.

Flexible, Transparent Pricing

We don't believe in hidden costs or complex pricing tiers that require a PhD to understand.

What You Get:

- Competitive hourly rates

- Pay-per-use models with no long-term lock-in

- Reserved instances for predictable workloads

- Enterprise volume discounts for large-scale deployments

Real Value: Mid-market to enterprise organizations can access economical GPU infrastructure for AI and HPC projects with personalized service, without the complexity typical of hyperscale cloud providers.

Built for AI Development Teams

Cyfuture AI offers on-demand GPUaaS tailored for AI, ML, and high-performance computing workloads with flexible scaling, enabling reduced time-to-market and accelerated AI development cycles.

Our platform includes:

- Pre-configured ML frameworks – TensorFlow, PyTorch, CUDA ready to deploy

- Automated scaling – Resources adjust dynamically to workload demands

- Expert support – 24/7 assistance from GPU infrastructure specialists

- Data sovereignty – Compliance-ready infrastructure for regulated industries

The Technical Architecture: How It All Works

Infrastructure Layer

Hardware Foundation: Providers deploy high-performance GPUs, such as NVIDIA A100, H100, or AMD MI300x, in secure and geographically distributed data centers.

Orchestration Systems: Kubernetes handles deployment, scaling, and scheduling of GPU workloads, while container catalogs such as NVIDIA NGC supply GPU-optimized images for those deployments.

Network Backbone: High-speed networking with NVLink, InfiniBand, and 100/200/400 Gigabit Ethernet prevents bottlenecks during distributed training.

Software Stack Integration

Framework Compatibility: Platforms typically ship with frameworks such as TensorFlow and PyTorch, along with the CUDA toolkit they depend on, to streamline AI model development and training.

Development Tools:

- Jupyter notebooks for experimentation

- MLOps pipelines for production deployment

- Version control for model management

- Monitoring dashboards for performance optimization

Security and Compliance

For enterprises, security isn't optional—it's mandatory:

- Data encryption at rest and in transit

- Isolated compute environments for multi-tenant security

- Compliance certifications (SOC 2, ISO 27001, GDPR)

- Audit logging for regulatory requirements

Overcoming Common GPUaaS Challenges

Challenge 1: Supply Constraints

The Challenge: Global demand for HBM3e far exceeds wafer-production and TSV-stacking capacity, with SK Hynix and Micron reporting fully booked lines through 2025.

Solution: Partner with providers like Cyfuture AI that have secured GPU allocations and guaranteed capacity commitments.

Challenge 2: Cost Management

The Risk: Uncontrolled GPU usage can lead to budget overruns.

Solution:

- Implement usage monitoring and alerts

- Use reserved instances for predictable workloads

- Leverage spot instances for fault-tolerant training jobs

- Set up automated resource scaling policies
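Usage monitoring can start as a simple projection of month-end spend from spend to date. The sketch below is a minimal illustration. The names, the 80% warning threshold, and the linear projection are assumptions rather than any provider's billing API.

```python
from dataclasses import dataclass


@dataclass
class BudgetStatus:
    spent: float
    projected: float
    alert: str  # "ok", "warning", or "critical"


def check_budget(gpu_hours_used: float, hourly_rate: float, monthly_budget: float,
                 day_of_month: int, days_in_month: int = 30,
                 warn_at: float = 0.8) -> BudgetStatus:
    """Compare spend so far, and a straight-line month-end projection, to the budget."""
    spent = gpu_hours_used * hourly_rate
    projected = spent / day_of_month * days_in_month
    if spent >= monthly_budget:
        alert = "critical"   # budget already exhausted
    elif projected >= warn_at * monthly_budget:
        alert = "warning"    # on track to approach or exceed the budget
    else:
        alert = "ok"
    return BudgetStatus(spent, projected, alert)


status = check_budget(gpu_hours_used=1500, hourly_rate=2.0,
                      monthly_budget=10_000, day_of_month=10)
print(status)
```

In practice the `gpu_hours_used` figure would come from your provider's usage export, and a "warning" result would trigger a notification or a scaling policy change.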

Challenge 3: Workload Optimization

The Challenge: Not all AI tasks require the same GPU power.

Solution: Match workloads to appropriate GPU tiers:

- Training: H100 or B200 for maximum throughput

- Fine-tuning: A100 or L40S for cost-performance balance

- Inference: T4 or optimized inference engines for production deployment
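Encoding this mapping in your job-submission tooling keeps teams from defaulting to the most expensive GPU. A minimal sketch, with a hypothetical function name and the tiers taken directly from the list above:

```python
# Tier recommendations from the list above; extend to match your provider's catalog.
GPU_TIERS = {
    "training": ["H100", "B200"],
    "fine-tuning": ["A100", "L40S"],
    "inference": ["T4"],
}


def recommend_gpus(workload: str) -> list[str]:
    """Return the suggested GPU models for a workload type."""
    key = workload.strip().lower()
    if key not in GPU_TIERS:
        raise ValueError(f"unknown workload {workload!r}; expected one of {sorted(GPU_TIERS)}")
    return GPU_TIERS[key]


print(recommend_gpus("Fine-tuning"))
```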

Best Practices for GPUaaS-Powered Chatbot Development

1. Start with Clear Requirements

Define your chatbot's:

- Language capabilities – Single vs. multilingual

- Domain expertise – General purpose vs. specialized (medical, legal, technical)

- Scale expectations – Concurrent users, requests per second

- Latency requirements – Response time SLAs

2. Optimize Your Training Pipeline

Data Efficiency:

- Use mixed-precision training (FP16/FP8) to reduce memory footprint

- Implement gradient accumulation to reach larger effective batch sizes within GPU memory limits

- Leverage transfer learning from pre-trained models

Compute Efficiency:

- Batch similar training jobs to maximize GPU utilization

- Use checkpointing to prevent loss from interruptions

- Monitor GPU memory usage and adjust accordingly
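Gradient accumulation is the least intuitive item on this list, so a toy example may help. The sketch below uses plain Python and a one-parameter model rather than any framework API. It shows that averaging gradients over equal-sized micro-batches reproduces the gradient of one large batch, which is what lets a memory-limited GPU behave as if it had a bigger batch size.

```python
def mse_grad(w: float, xs: list, ys: list) -> float:
    """Gradient of mean squared error for the 1-D model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w: float, xs: list, ys: list, micro_batch_size: int) -> float:
    """Accumulate micro-batch gradients so they match a single large-batch gradient."""
    if len(xs) % micro_batch_size != 0:
        raise ValueError("batch must divide evenly into micro-batches")
    steps = len(xs) // micro_batch_size
    total = 0.0
    for start in range(0, len(xs), micro_batch_size):
        end = start + micro_batch_size
        # Scale each micro-batch by 1/steps so the sum equals the full-batch mean.
        total += mse_grad(w, xs[start:end], ys[start:end]) / steps
    return total  # the optimizer step would be applied once, after accumulation


xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
print("full batch: ", mse_grad(0.5, xs, ys))
print("accumulated:", accumulated_grad(0.5, xs, ys, micro_batch_size=2))
```

In a real training loop the same idea applies across devices as well: the effective batch size is the micro-batch size times the accumulation steps times the number of GPUs.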

3. Build for Production from Day One

Scalability Planning:

- Design stateless inference services

- Implement load balancing and auto-scaling

- Use distributed caching for common queries

Monitoring and Observability:

- Track model performance metrics (accuracy, latency, throughput)

- Set up alerts for anomalies and degradation

- Implement A/B testing for model updates
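For latency alerts, tail percentiles are usually more telling than averages, because a handful of slow responses can ruin a conversation. The sketch below checks the 95th percentile against the 800 ms target mentioned earlier. The choice of p95 and the function names are assumptions, and it uses only Python's standard `statistics` module.

```python
import statistics


def p95(latencies_ms: list) -> float:
    """95th-percentile latency; needs at least two samples."""
    # n=20 yields 19 cut points at 5% steps; the last one is the 95th percentile.
    return statistics.quantiles(latencies_ms, n=20, method="inclusive")[-1]


def latency_alert(latencies_ms: list, slo_ms: float = 800.0) -> bool:
    """True when the tail latency breaches the service-level objective."""
    return p95(latencies_ms) > slo_ms


healthy = [300.0] * 100
degraded = [300.0] * 90 + [1200.0] * 10  # one request in ten is slow

print("healthy alert: ", latency_alert(healthy))
print("degraded alert:", latency_alert(degraded))
```

The degraded sample has a mean of only 390 ms, so an average-based alert would miss it while the p95 check fires.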

4. Embrace Continuous Improvement

Modern chatbots aren't static—they evolve:

- Collect user feedback systematically

- Retrain models with new conversational data

- Fine-tune regularly to adapt to changing user needs

- Update knowledge bases with current information

The Future: What's Next for GPUaaS and Generative AI

2026 and Beyond

The global AI data center GPU market size accounted for USD 10.51 billion in 2025 and is predicted to increase from USD 12.83 billion in 2026 to approximately USD 77.15 billion by 2035, expanding at a CAGR of 22.06%.

Emerging Trends:

1. Edge AI Integration: The edge AI hardware market is projected to reach $58.9 billion by 2030, up from $26.14 billion in 2025, with enterprises now processing 75% of their data at the edge.

2. Specialized AI Accelerators: While NVIDIA dominates today, specialized chips for inference and specific AI workloads are emerging, offering better cost-performance for production deployments.

3. Quantum-AI Hybrid Systems: The convergence of quantum computing and classical AI systems will open new possibilities for optimization and model training.

4. Sustainable AI Computing: Green data centers powered by renewable energy will become standard, addressing the environmental impact of large-scale AI training.

Accelerate Your AI Innovation with Cyfuture AI

The generative AI revolution isn't waiting for anyone.

Every day you spend managing on-premises GPU infrastructure is a day your competitors are using GPUaaS to ship faster, iterate more efficiently, and capture market share.

Here's what happens when you partner with Cyfuture AI:

- Week 1: Deploy your first GPU-powered development environment

- Weeks 2-4: Launch initial chatbot training with pre-configured ML frameworks

- Month 2: Scale to production with auto-scaling inference infrastructure

- Month 3+: Continuously improve with ongoing model updates and optimization

The Numbers Speak:

- 77% faster training with latest GPU architectures

- 50-70% cost reduction vs. traditional infrastructure

- Zero maintenance burden – focus on AI, not hardware management

- Instant scalability – from prototype to production in days

Frequently Asked Questions

1. What is the difference between GPUaaS and traditional cloud computing?

Traditional cloud computing provides general-purpose virtual machines with CPU resources. GPUaaS specifically delivers high-performance GPU instances optimized for parallel processing workloads like AI training, inference, and scientific computing. GPUaaS platforms include specialized software stacks (CUDA, cuDNN, ML frameworks) and networking (NVLink, InfiniBand) designed for GPU-intensive tasks.

2. How much does it cost to train a chatbot using GPUaaS?

Costs vary based on model complexity, dataset size, and training duration. A small-scale chatbot (1-7B parameters) might cost $500-$2,000 to train on A100 GPUs over 2-4 days. Medium-scale models (7-13B parameters) typically range from $2,000-$8,000. Large-scale conversational AI (13B+ parameters) can cost $10,000-$100,000+ depending on requirements. GPUaaS's pay-per-use model makes these costs predictable and controllable.
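You can approximate where a job falls in these ranges by multiplying GPU count, duration, and hourly rate. In the sketch below, the function name and the $2.00 per-GPU hourly rate for an A100 are hypothetical placeholders; substitute your provider's actual pricing.

```python
def training_job_cost(num_gpus: int, days: float, hourly_rate: float) -> float:
    """Total rental cost for a job that keeps every GPU busy for the whole run."""
    return num_gpus * days * 24 * hourly_rate


ASSUMED_A100_RATE = 2.00  # hypothetical USD per GPU-hour, for illustration only

print(f"4 GPUs for 3 days: ${training_job_cost(4, 3, ASSUMED_A100_RATE):,.0f}")
print(f"8 GPUs for 4 days: ${training_job_cost(8, 4, ASSUMED_A100_RATE):,.0f}")
```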

3. Can I use GPUaaS for both training and inference?

Absolutely. GPUaaS providers offer GPU tiers optimized for different workloads. High-end GPUs (H100, B200) excel at training large models, while more cost-effective options (T4, L40S) are perfect for inference. Many organizations train on powerful GPUs and deploy on inference-optimized hardware to balance performance and cost.

4. How secure is my data with GPUaaS providers?

Reputable GPUaaS providers implement enterprise-grade security including data encryption (at rest and in transit), isolated compute environments, compliance certifications (SOC 2, ISO 27001, HIPAA, GDPR), and audit logging. Choose providers with documented security practices and compliance reports relevant to your industry.

5. What GPU should I choose for my chatbot project?

For training: H100 or B200 for large-scale models, A100 for medium-scale projects, or L40S for smaller fine-tuning tasks. For inference: L40S, T4, or A10 GPUs offer excellent cost-performance. Consider factors like model size (parameters and memory requirements), training time constraints, budget, and expected inference throughput.

6. How quickly can I get started with GPUaaS?

With platforms like Cyfuture AI, you can provision GPU instances within minutes. Pre-configured environments with popular ML frameworks are ready to use immediately. Total time from signup to running your first training job: typically under 1 hour.

7. Can GPUaaS handle variable workloads?

Yes, this is one of GPUaaS's key advantages. Auto-scaling policies can dynamically adjust resources based on demand—scaling up during training runs or high-traffic periods, and scaling down during idle times. You only pay for what you use, making it perfect for workloads with variable compute requirements.

8. What happens if my training job is interrupted?

Modern GPUaaS platforms support checkpointing, which saves model state at regular intervals. If an interruption occurs, training resumes from the last checkpoint rather than starting over. This matters most on spot/preemptible instances, which are cheaper but can be reclaimed at short notice, so check how your provider handles preemption and whether interrupted jobs restart automatically.
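The save-and-resume pattern is simple enough to sketch in a few lines. In this illustration a JSON file stands in for real model state and a raised exception simulates a preemption. The function names are our own, and real frameworks provide their own save and load routines.

```python
import json
import os
import tempfile


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temporary file, then rename, so a crash never leaves a partial file."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> dict:
    """Return the saved state, or a fresh one if no checkpoint exists yet."""
    if not os.path.exists(path):
        return {"step": 0, "loss_history": []}
    with open(path) as f:
        return json.load(f)


def train(path: str, total_steps: int, checkpoint_every: int, fail_at=None) -> dict:
    """Run from the last saved step; `fail_at` simulates an interruption."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        if fail_at is not None and step == fail_at:
            raise RuntimeError("simulated preemption")
        state["loss_history"].append(1.0 / (step + 1))  # placeholder for a real training step
        state["step"] = step + 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state


with tempfile.TemporaryDirectory() as workdir:
    ckpt = os.path.join(workdir, "checkpoint.json")
    try:
        train(ckpt, total_steps=10, checkpoint_every=3, fail_at=7)
    except RuntimeError:
        print("interrupted; last saved step:", load_checkpoint(ckpt)["step"])
    resumed = train(ckpt, total_steps=10, checkpoint_every=3)
    print("finished at step:", resumed["step"])
```

Only the work done since the last checkpoint is lost, so the checkpoint interval is a trade-off between storage overhead and how much compute you are willing to repeat.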

9. Do I need deep technical expertise to use GPUaaS?

While some GPU and ML knowledge helps, many GPUaaS providers (including Cyfuture AI) offer managed services, pre-configured environments, and expert support. You can start with templates for common use cases (chatbot development, NLP, computer vision) and scale your expertise as your projects grow.

Author Bio:

Meghali is a tech-savvy content writer with expertise in AI, Cloud Computing, App Development, and Emerging Technologies. She excels at translating complex technical concepts into clear, engaging, and actionable content for developers, businesses, and tech enthusiasts. Meghali is passionate about helping readers stay informed and make the most of cutting-edge digital solutions.